A judge in Washington state has blocked video evidence that’s been “AI-enhanced” from being submitted in a triple murder trial. And that’s a good thing, given the fact that too many people seem to think applying an AI filter can give them access to secret visual data.

“Your honor, the evidence shows quite clearly that the defendant was holding a weapon with his third arm.”

If you’ve ever encountered an AI hallucinating stuff that just does not exist at all, you know how bad the idea of AI-enhanced evidence actually is.

Everyone uses the word “hallucinate” when describing visual AI because it’s normie-friendly and cool sounding, but the results are a product of math. Very complex math, yes, but computers aren’t taking drugs and randomly pooping out images because computers can’t do anything truly random.

You know what else uses math? Basically every image modification algorithm, including resizing. I wonder how this judge would feel about viewing a 720p video on a 4k courtroom TV because “hallucination” takes place in that case too.

There is a huge difference between interpolating pixels and inserting whole objects into pictures.

Both insert pixels that didn’t exist before, so where do we draw the line of how much of that is acceptable?

Look at it this way: if you have an unreadable licence plate because of low resolution, interpolating won’t make it readable (as long as we haven’t switched to a CSI universe). An AI, on the other hand, could just “invent” (I know, I know, normie speak in your eyes) a readable one.
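To make the difference concrete, here is a minimal Python sketch of linear interpolation on a single row of pixels. The pixel values and the `upscale_linear` helper are made up for illustration, and real scalers work in 2-D with fancier kernels, so treat this as a sketch of plain linear blending only. Every new pixel is a weighted average of its two original neighbours, so the upscaled row cannot contain a stroke or digit that the low-res row did not already hint at.

```python
def upscale_linear(row, factor):
    """Upscale a 1-D row of pixel values by plain linear interpolation."""
    out = []
    for i in range(len(row) - 1):
        a, b = row[i], row[i + 1]
        for k in range(factor):
            t = k / factor              # 0.0 at pixel a, approaching 1.0 at pixel b
            out.append(a + (b - a) * t)
    out.append(row[-1])                 # keep the final original pixel
    return out


# Hypothetical 4-pixel slice across a blurry plate character.
low_res = [40, 200, 60, 180]
high_res = upscale_linear(low_res, 4)

# All interpolated values stay inside the range of the original samples.
stays_in_range = min(low_res) <= min(high_res) and max(high_res) <= max(low_res)
print(len(low_res), "pixels in,", len(high_res), "pixels out, in range:", stays_in_range)
```

A generative upscaler is not bound by that averaging rule, which is exactly why it can produce a crisp character that was never recorded.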

You will draw the line yourself when you get your first ticket for speeding, when it wasn’t your car.

Interesting example, because tickets issued by automated cameras aren’t enforced in most places in the US. You can safely ignore those tickets and the police won’t do anything about it because they know how faulty these systems are and most of the cameras are owned by private companies anyway.

“Readable” is a subjective matter of interpretation, so again, I’m confused on how exactly you’re distinguishing good & pure fictional pixels from bad & evil fictional pixels

Whether tickets are enforced or not doesn’t change my argument, nor does it invalidate it.

You are acting stubborn and childish. Everything there was to say has been said. If you still think you are right, do it, as you are not able or willing to understand. Let me be clear: I think you are trolling and I’m not in any mood to participate in this anymore.

Sorry, it’s just that I work in a field where making distinctions is based on math and/or logic, while you’re making a distinction between AI- and non-AI-based image interpolation based on opinion and subjective observation

You can safely ignore those tickets and the police won’t do anything

Wait what? No.

It’s entirely possible if you ignore the ticket, a human might review it and find there’s insufficient evidence. But if, for example, you ran a red light and they have a photo that shows your number plate and your face… then you don’t want to ignore that ticket. And they generally take multiple photos, so even if the one you received on the ticket doesn’t identify you, that doesn’t mean you’re safe.

When automated infringement systems were brand new the cameras were low quality / poorly installed / didn’t gather evidence necessary to win a court challenge… getting tickets overturned was so easy they didn’t even bother taking it to court. But it’s not that easy now, they have picked up their game and are continuing to improve the technology.

Also - if you claim someone else was driving your car, and then they prove in court that you were driving… congratulations, your slap on the wrist fine is now a much more serious matter.

normie-friendly

Whenever people say things like this, I wonder why that person thinks they’re so much better than everyone else.

Tangentially related: the more people seem to support AI all the things the less it turns out they understand it.

I work in the field. I had to explain to a CIO that his beloved “ChatPPT” was just autocomplete. He became enraged. We implemented a 2015 chatbot instead, he got his bonus.

We have reached the winter of my discontent. Modern life is rubbish.

Normie, layman… as you’ve pointed out, it’s difficult to use these words without sounding condescending (which I didn’t mean to be). The media using words like “hallucinate” to describe linear algebra is necessary because most people just don’t know enough math to understand the fundamentals of deep learning - which is completely fine, people can’t know everything and everyone has their own specialties. But any time you simplify science so that it can be digestible by the masses, you lose critical information in the process, which can sometimes be harmfully misleading.

LLMs (the models that “hallucinate” is most often used in conjunction with) are not Deep Learning, normie.

https://en.m.wikipedia.org/wiki/Large_language_model

LLMs are artificial neural networks

https://en.m.wikipedia.org/wiki/Neural_network_(machine_learning)

A network is typically called a deep neural network if it has at least 2 hidden layers

I’m not going to bother arguing with you but for anyone reading this: the poster above is making a bad faith semantic argument.

In the strictest technical terms AI, ML and Deep Learning are distinct, and they have specific applications.

This insufferable asshat is arguing that since they all use fuel, fire and air they are all engines. Which isn’t wrong, but it’s also not the argument we are having.

@OP good day.

When you want to cite sources like me instead of making personal attacks, I’ll be here 🙂

computers aren’t taking drugs and randomly pooping out images

Sure, no drugs involved, but they are running a statistically proven random number generator and using that (along with non-random data) to generate the image.

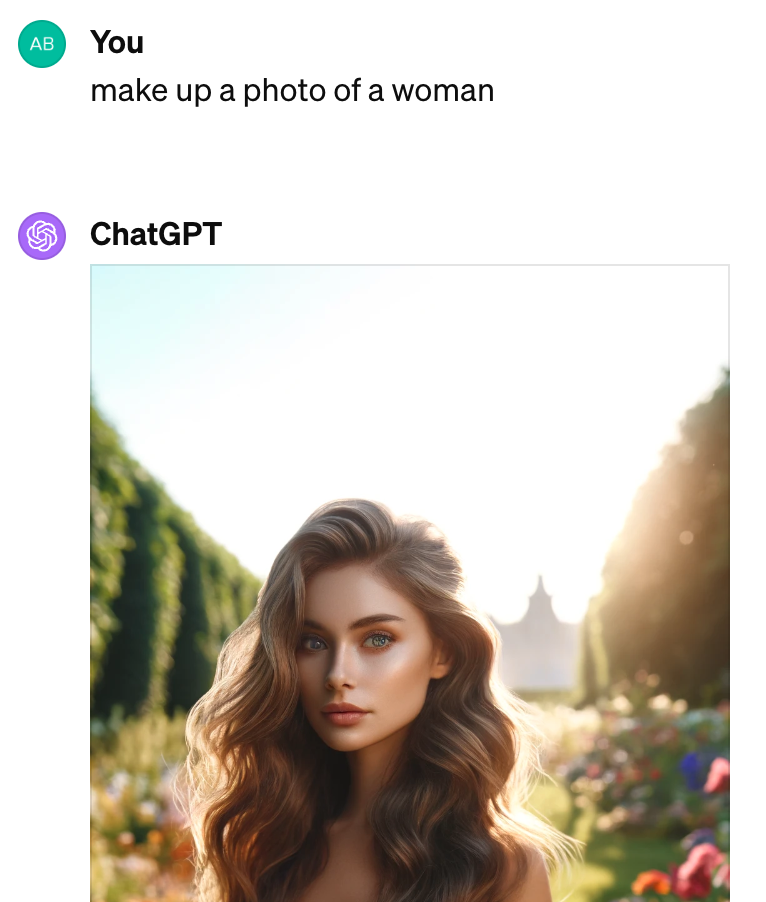

The result is this - ask for the same image, get two different images — similar, but clearly not the same person - sisters or cousins perhaps… but nowhere near usable as evidence in court:

Tell me you don’t know shit about AI without telling me you don’t know shit. You can easily reproduce the exact same image by defining the starting seed and constraining the network to a specific sequence of operations.
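For anyone who wants to see the seed point in miniature, here is a toy Python sketch. `toy_generate` is a hypothetical stand-in for a generative model, not any real library’s API: it just draws “pixel” values from a seeded pseudo-random generator. Real pipelines can also need deterministic kernels and fixed operation ordering to reproduce bit-for-bit, which this sketch does not cover.

```python
import random


def toy_generate(seed, n=8):
    """Stand-in for a generative model: values drawn from a seeded PRNG."""
    rng = random.Random(seed)           # all randomness flows from this seed
    return [rng.randint(0, 255) for _ in range(n)]


same_a = toy_generate(seed=1234)
same_b = toy_generate(seed=1234)        # same seed, same sequence
other = toy_generate(seed=1235)         # a different seed will generally differ

print("same seed reproduces output:", same_a == same_b)
```

So “ask twice, get two different images” only happens when the seed is left to vary between runs.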

computers can’t do anything truly random.

Technically incorrect - computers can be supplied with sources of entropy, so while it’s true that they will produce the same output given identical inputs, it is in practice quite possible to ensure that they do not receive identical inputs if you don’t want them to.
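A rough Python sketch of that idea, assuming the operating system exposes an entropy pool through `os.urandom` (it does on mainstream platforms). The `fresh_seed` helper is a made-up name for illustration. The seed comes from outside the program, so two runs almost never start the same way, but once the seed is known the rest is fully repeatable.

```python
import os
import random


def fresh_seed():
    """Draw a 64-bit seed from the operating system's entropy source."""
    return int.from_bytes(os.urandom(8), "big")


seed = fresh_seed()                     # differs from run to run
rng = random.Random(seed)
sample = [rng.random() for _ in range(3)]

# Replaying with the recorded seed gives the identical sequence.
replay_rng = random.Random(seed)
replay = [replay_rng.random() for _ in range(3)]
print("replay matches:", replay == sample)
```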

IIRC there was a random number generator website where the machine was hooked up to a potato or some shit.

Bud, hallucinate is a perfect term for the shit AI creates because it doesn’t understand reality, regardless of whether math is creating that hallucination or not

It’s not AI, it’s PISS. Plagiarized information synthesis software.

Just like us!

You know what else uses math? Tripping on acid. From the chemistry used to create it, to the fractals you see while on it, LSD is math.

Except for the important part of how LSD affects people. Can you point me to the math that precisely describes how human consciousness (not just the brain) reacts to LSD? Because I can point you to the math that precisely describes how interpolation and deep learning works.

No computer algorithm can accurately reconstruct data that was never there in the first place.

Ever.

This is an ironclad law, just like the speed of light and the acceleration of gravity. No new technology, no clever tricks, no buzzwords, no software will ever be able to do this.

Ever.

If the data was not there, anything created to fill it in is by its very nature not actually reality. This includes digital zoom, pixel interpolation, movement interpolation, and AI upscaling. It preemptively also includes any other future technology that aims to try the same thing, regardless of what it’s called.
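One way to see the core of this, as a small Python sketch with made-up pixel values: capturing at low resolution is a many-to-one mapping, so different scenes can leave exactly the same recording. The `downsample_2x` helper is a simplified assumption (plain pair averaging), not how any particular camera works.

```python
def downsample_2x(row):
    """Average each pair of neighbouring pixels into one (toy sensor model)."""
    return [(row[i] + row[i + 1]) / 2 for i in range(0, len(row) - 1, 2)]


# Two clearly different hypothetical scenes...
scene_a = [0, 200, 100, 100]
scene_b = [100, 100, 50, 150]

# ...that produce the same low-resolution recording.
recorded_a = downsample_2x(scene_a)
recorded_b = downsample_2x(scene_b)
print(recorded_a, recorded_b, recorded_a == recorded_b)
```

Given only the recording, an algorithm has no way to tell which scene was in front of the lens. It can pick the more plausible one based on prior assumptions, but that choice comes from the assumptions, not from the image.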

One little correction, digital zoom is not something that belongs on that list. It’s essentially just cropping the image. That said, “enhanced” digital zoom I agree should be on that list.

Are you saying CSI lied to me?

Even CSI: Miami?

Hold up. Digital zoom is, in all the cases I’m currently aware of, just cropping the available data. That’s not reconstruction, it’s just losing data.

Otherwise, yep, I’m with you there.

See this follow up:

https://lemmy.world/comment/9061929

Digital zoom makes the image bigger but without adding any detail (because it can’t). People somehow still think this will allow you to see small details that were not captured in the original image.

Also since companies are adding AI to everything, sometimes when you think you’re just doing a digital zoom you’re actually getting AI upscaling.

There was a court case not long ago where the prosecution wasn’t allowed to pinch-to-zoom evidence photos on an iPad for the jury, because the zoom algorithm creates new information that wasn’t there.

Digital zoom is just cropping and enlarging. You’re not actually changing any of the data. There may be enhancement applied to the enlarged image afterwards but that’s a separate process.
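Here is the simplest possible version of that in Python, as a sketch: crop a region, then enlarge it by repeating pixels (nearest neighbour). The tiny 4x4 “image” and the `digital_zoom` helper are invented for illustration; actual devices usually apply some interpolation on top.

```python
def digital_zoom(image, top, left, height, width, factor):
    """Crop a region, then enlarge it by repeating each pixel."""
    crop = [row[left:left + width] for row in image[top:top + height]]
    zoomed = []
    for row in crop:
        wide = [px for px in row for _ in range(factor)]       # repeat columns
        zoomed.extend([list(wide) for _ in range(factor)])     # repeat rows
    return crop, zoomed


image = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 120],
    [130, 140, 150, 160],
]
crop, zoomed = digital_zoom(image, top=1, left=1, height=2, width=2, factor=3)

# The zoomed picture is bigger but contains exactly the same pixel values.
values_in_crop = {px for row in crop for px in row}
values_in_zoom = {px for row in zoomed for px in row}
print(len(zoomed), "x", len(zoomed[0]), values_in_zoom == values_in_crop)
```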

But the fact remains that digital zoom cannot create details that were invisible in the first place due to the distance from the camera to the subject. Modern implementations of digital zoom always use some manner of interpolation algorithm, even if it’s just a simple linear blur from one pixel to the next.

The problem is not in how digital zoom works, it’s in how people think it works. A lot of people (i.e. [l]users, ordinary non-technical people) still labor under the impression that digital zoom somehow makes the picture “closer” to the subject and can enlarge or reveal details that were not detectable in the original photo, which is a notion we need to excise from people’s heads.

It preemptively also includes any other future technology that aims to try the same thing

No it doesn’t. For example you can, with compute power, correct for distortions introduced by camera lenses/sensors/etc and drastically increase image quality. For example this photo of Pluto was taken from 7,800 miles away - click the link for a version of the image that hasn’t been resized/compressed by lemmy:

The unprocessed image would look nothing at all like that. There’s a lot more data in an image than you can see with the naked eye, and algorithms can extract/highlight the data. That’s obviously not what a generative ai algorithm does, those should never be used, but there are other algorithms which are appropriate.

The reality is every modern photo is heavily processed - look at this example by a wedding photographer, even with a professional camera and excellent lighting the raw image on the left (where all the camera processing features are disabled) looks like garbage compared to exactly the same photo with software processing:

None of your examples are creating new legitimate data from whole cloth. They’re just making details that were already there visible to the naked eye. We’re not talking about taking a giant image that’s got too many pixels to fit on your display device in one go, and just focusing on a specific portion of it. That’s not the same thing as attempting to interpolate missing image data. In that case the data was there to begin with, it just wasn’t visible due to limitations of the display or the viewer’s retinas.

The original grid of pixels is all of the meaningful data that will ever be extracted from any image (or video, for that matter).

Your wedding photographer’s picture actually throws away color data in the interest of contrast and to make it more appealing to the viewer. When you fiddle with the color channels like that and see all those troughs in the histogram that make it look like a comb? Yeah, all those gaps and spikes are actually original color/contrast data that is being lost. There is less data in the touched up image than the original, technically, and if you are perverse and own a high bit depth display device (I do! I am typing this on a machine with a true 32-bit-per-pixel professional graphics workstation monitor.) you actually can stare at it and see the entirety of the detail captured in the raw image before the touchups. A viewer might not think it looks great, but how it looks is irrelevant from the standpoint of data capture.
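The comb effect is easy to reproduce. This Python sketch pushes every 8-bit level through a simple contrast stretch and counts how many distinct levels survive. The `stretch_contrast` curve, its gain of 1.5, and the mid-grey pivot are arbitrary assumptions for illustration, not what any particular editing tool does.

```python
def stretch_contrast(value, gain=1.5, pivot=128):
    """Scale an 8-bit level away from a mid-grey pivot, then clip to 0..255."""
    stretched = round(pivot + (value - pivot) * gain)
    return max(0, min(255, stretched))


levels_in = list(range(256))                       # every possible input level
levels_out = [stretch_contrast(v) for v in levels_in]

used = set(levels_out)
distinct_out = sorted(used)
missing = [v for v in range(256) if v not in used]  # the gaps in the "comb"

print(len(distinct_out), "levels used,", len(missing), "levels empty")
```

Shadows and highlights get clipped together at 0 and 255, and the stretched midtones skip over output levels, so the edited histogram ends up with fewer occupied bins than the original.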

They talked about algorithms used for correcting lens distortions with their first example. That is absolutely a valid use case and extracts new data by making certain assumptions with certain probabilities. Your newly created law of nature is just your own imagination and is not the prevalent understanding in the scientific community. No, quite the opposite, scientific practice runs exactly counter to your statements.

No computer algorithm can accurately reconstruct data that was never there in the first place.

Okay, but what if we’ve got a computer program that can just kinda insert red eyes, joints, and plumes of chum smoke on all our suspects?

In my first year of university, we had a fun project to make us get used to physics. One of the projects required filming someone throwing a ball upwards, and then using the footage to get the maximum height the ball reached, and doing some simple calculations to get the initial velocity of the ball (if I recall correctly).

One of the groups that chose that project was having a discussion on a problem they were facing: the ball was clearly moving upwards on one frame, but on the very next frame it was already moving downwards. You couldn’t get the exact apex from any specific frame.

So one of the guys, bless his heart, gave a suggestion: “what if we played the (already filmed) video in slow motion… And then we filmed the video… And we put that one in slow motion as well? Maybe do that a couple of times?”

A friend of mine was in that group and he still makes fun of that moment, to this day, over 10 years later. We were studying applied physics.
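For the record, re-filming adds nothing, but a physical model does. A minimal Python sketch, assuming constant gravity, no air resistance, and evenly spaced frames: fit a parabola through the three frames around the peak and read off the apex between frames. The throw speed, frame rate, and helper names are all hypothetical.

```python
import math

G = 9.81  # m/s^2, assumed constant


def apex_from_three_frames(y_prev, y_mid, y_next):
    """Vertex of the parabola through three equally spaced height samples.

    Returns (offset from the middle frame in frame units, apex height).
    """
    denom = y_prev - 2.0 * y_mid + y_next
    offset = (y_prev - y_next) / (2.0 * denom)
    height = y_mid - (y_next - y_prev) ** 2 / (8.0 * denom)
    return offset, height


def height_at(t, v0):
    """Ideal ball height at time t for launch speed v0 (starting at height 0)."""
    return v0 * t - 0.5 * G * t * t


# Hypothetical throw at 5 m/s filmed at 30 fps; the peak falls between frames 15 and 16.
true_v0 = 5.0
fps = 30.0
frames = [height_at(n / fps, true_v0) for n in (14, 15, 16)]

offset, apex = apex_from_three_frames(*frames)
v0_estimate = math.sqrt(2.0 * G * apex)   # from v0^2 = 2 * g * h_max
print(f"apex {apex:.4f} m at {offset:.2f} frames past frame 15, v0 ~ {v0_estimate:.3f} m/s")
```

The extra precision comes entirely from assuming the ball follows a parabola. With noisy real footage you would fit more than three frames, and the estimate is only as good as that assumption.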

That’s wrong. With a degree of certainty, you will always be able to say that this data was likely there. And because existence is all about probabilities, you can expect specific interpolations to be an accurate reconstruction of the data. We do it all the time with resolution upscaling, for example. But of course, from a certain lack of information onward, the predictions become less and less reliable.

By your argument, nothing is ever real, so let’s all jump into a chasm.

There’s a grain of truth to that. Everything you see is filtered by the limitations of your eyes and the post-processing applied by your brain which you can’t turn off. That’s why you don’t see the blind spot on your retinas where your optic nerve joins your eyeball, for instance.

You can argue what objective reality is from within the limitations of human observation in the philosophy department, which is down the hall and to your left. That’s not what we’re talking about, here.

From a computer science standpoint you can absolutely mathematically prove the amount of data that is captured in an image and, like I said, no matter how hard you try you cannot add any more data to it that can be actually guaranteed or proven to reflect reality by blowing it up, interpolating it, or attempting to fill in patterns you (or your computer) think are there. That’s because you cannot prove, no matter how the question or its alleged solution are rephrased, that any details your algorithm adds are actually there in the real world except by taking a higher resolution/closer/better/wider spectrum image of the subject in question to compare. And at that point it’s rendered moot anyway, because you just took a higher res/closer/better/wider/etc. picture that contains the required detail, and the original (and its interpolation) are unnecessary.

You cannot technically prove it, that’s true, but that does not invalidate the interpolated or extrapolated data, because you will be able to have a certain degree of confidence in them, be able to judge their meaningfulness with a specific probability. And that’s enough, because you are never able to 100% prove something in physical sciences. Never. Even our most reliable observations, strongest theories and most accurate measurements all have a degree of uncertainty. Even the information and quantum theories you rest your argument on are unproven and unprovable by your standards, because you cannot get to 100% confidence. So, if you find that there’s enough evidence for the science you base your understanding of reality on, then rationally and by deductive reasoning you will have to accept that the prediction of a machine learning model that extrapolates some data where the probability of validity is just as great as it is for quantum physics must be equally true.

Unfortunately it does need pointing out. Back when I was in college, professors would need to repeatedly tell their students that real-world forensics don’t work like they do on NCIS. I’m not sure how much things may or may not have changed since then, but based on American literacy levels being what they are, I do not suppose things have changed that much.

you might be referring to the CSI Effect

It’s certainly similar in that CSI played a role in forming unrealistic expectations in students’ minds. But rather than expecting more physical evidence in order to make a prosecution, the students expected magic to happen on computers and in lab work (often faster than physically possible).

AI enhancement is not uncovering hidden visual data, but rather it generates that information based on previously existing training data and shoehorns that in. It certainly could be useful, but it is not real evidence.

ENHANCE !

Yes. When people were in full conspiracy mode on Twitter over Kate Middleton, someone took that grainy pic of her in a car and used AI to “enhance it,” to declare it wasn’t her because her mole was gone. It got so much traction people thought the ai fixed up pic WAS her.

Don’t forget people thinking that scanlines in a news broadcast over Obama’s suit meant that Obama was a HOLOGRAM and ACTUALLY A LIZARD PERSON.

There’s people who still believe in astrology. So, yes.

And people who believe the Earth is flat, and that Bigfoot and the Loch Ness Monster exist, and there are reptilians replacing the British royal family…

People are very good at deluding themselves into all kinds of bullshit. In fact, I posit that they’re even better at it than at learning the facts or comprehending empirical reality.

Of course, not everyone is technology literate enough to understand how it works.

That should be the default assumption, that something should be explained so that others understand it and can make better, informed decisions.

It’s not only that everyone isn’t technologically literate enough to understand the limits of this technology - the AI companies are actively over-inflating their capabilities in order to attract investors. When the most accessible information about the topic is designed to get non-technically proficient investors on board with your company, of course the general public is going to get an overblown idea of what the technology can do.

It’s not actually worse than eyewitness testimony.

This is not an endorsement of AI, just pointing out that truth has no place in a courtroom, and refusing to lie will get you locked in a cafe.

Too good, not fixing it.

According to the evidence, the defendant clearly committed the crime with all 17 of his fingers. His lack of remorse is obvious by the fact that he’s clearly smiling wider than his own face.

AI enhanced = made up.

It’s incredibly obvious when you call the current generation of AI by its full name, generative AI. It’s creating data, that’s what it’s generating.

Everything that is labeled “AI” is made up. It’s all just statistically probable guessing, made by a machine that doesn’t know what it is doing.

Society = made up, so I’m not sure what your argument is.

My argument is that a video camera doesn’t make up video, an ai does.

video camera doesn’t make up video, an ai does.

What’s that even supposed to mean? Do you even know how a camera works? What about an AI?

Yes, I do. Cameras work by detecting light using a charged coupled device or an active pixel sensor (CMOS). Cameras essentially take a series of pictures, which makes a video. They can have camera or lens artifacts (like rolling shutter illusion or lens flare) or compression artifacts (like DCT blocks) depending on how they save the video stream, but they don’t make up data.

Generative AI video upscaling works by essentially guessing (generating) what would be there if the frame were larger. I’m using “guessing” colloquially, since it doesn’t have agency to make a guess. It uses a model that has been trained on real data. What it can’t do is show you what was actually there, just its best guess using its diffusion model. It is literally making up data. Like, that’s not an analogy, it actually is making up data.

Ok, you clearly have no fucking idea what you’re talking about. No, reading a few terms on Wikipedia doesn’t count as “knowing”.

CMOS isn’t the only transducer for cameras - in fact, no one would start the explanation there. Generative AI doesn’t have to be based on diffusion. You’re clearly just repeating words you’ve seen used elsewhere - you are the AI.

Yes, I also mentioned CCDs. Charge Coupled Device is what that stands for. You can tell I didn’t look it up, because I originally called it a “charged coupled device” and not a “charge coupled device”. My bad, I should have checked Wikipedia.

Can you point me to a generative AI that doesn’t make up data? GANs are still generative, and generative AIs make up data.

Why not make it a fully AI court and save time if they were going to go that way. It would save so much time and money.

Of course it wouldn’t be very just, but then regular courts aren’t either.

Honestly, an open-source auditable AI Judge/Justice would be preferable to Thomas, Alito, Gorsuch and Barrett any day.

Me, testifying to the AI judge: “Your honor I am I am I am I am I am I am I am I am I am”

AI Judge: “You are you are you are you are you are you…”

Me: Escapes from courthouse while the LLM is stuck in a loop

You’d think it would be obvious you can’t submit doctored evidence and expect it to be upheld in court.

If only it worked that way in practice eh.

We found these marijuanas in his pocket, they were already in evidence bags for us even.

The model that can separate the fact from fiction and falsehood will be worth far more than any model creating fiction and falsehood.

For example, there was a widespread conspiracy theory that Chris Rock was wearing some kind of face pad when he was slapped by Will Smith at the Academy Awards in 2022. The theory started because people started running screenshots of the slap through image upscalers, believing they could get a better look at what was happening.

Sometimes I think our ancestors shouldn’t have made it out of the ocean.

We do not need AI pulling a George Lucas.

AI can make any blurry criminal look like George Lucas with the right LoRAs.

“Just so we’re clear guys, fake evidence is not allowed”

Used to be that people called it the “CSI Effect” and blamed it on television.

Funny thing. While people worry about unjust convictions, the “AI-enhanced” video was actually offered as evidence by the defense.

Yeah, this is a really good call. I’m a fan of what we can do with AI, but when you start looking at those upscaled videos with a magnifying glass… It’s just making s*** up that looks good.