These are the subtle types of errors that are much more likely to cause problems than when it tells someone to put glue in their pizza.

Obviously you need hot glue for pizza, not the regular stuff.

It do be keepin the cheese from slidin off onto yo lap tho

Yes, it’s totally ok to reuse fish tank tubing for grammy’s oxygen mask

How else are the toppings supposed to stay in place?

It blows my mind that these companies think AI is good as an informative resource. The whole point of generative text AIs is to make things up based on their training data. It doesn’t learn, it generates. It’s all made up, yet they want to slap it on a search engine like it provides factual information.

It’s like the difference between being given a grocery list from your mum and trying to remember what your mum usually sends you to the store for.

True, and it’s excellent at generating basic lists of things. But you need a human to actually direct it.

Having Google just generate whatever text is like just mashing the keys on a typewriter. You have tons of perfectly formed letters that mean nothing. They make no sense because a human isn’t guiding them.

I mean, it does learn, it just lacks reasoning, common sense or rationality.

What it learns is what words should come next, with a very complex and nuanced way of deciding that can very plausibly mimic the things that it lacks, since the best sequence of next-words is very often coincidentally reasoned, rational or demonstrating common sense. Sometimes it’s just lies that fit with the form of a good answer though.

I’ve seen some people work on using it the right way, and it actually makes sense. It’s good at understanding what people are saying, and what type of response would fit best. So you let it decide that, and give it the ability to direct people to the information they’re looking for, without actually trying to reason about anything. It doesn’t know what your monthly sales average is, but it does know that a chart of data from the sales system filtered to your user, specific product and time range is a good response in this situation.
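A rough sketch of that idea in Python, purely as an illustration: the model only picks a response type, and the actual numbers come from the real system. Everything here is hypothetical, including `classify_intent` (a keyword stub standing in for the language model), `ChartRequest`, and the `"sales_system"` source name.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChartRequest:
    """A pointer to real data; the model never produces the numbers itself."""
    source: str
    user: str
    product: str
    time_range: str


def classify_intent(question: str) -> str:
    # Stand-in for the language model (assumption): it only decides what
    # kind of response fits the question, it does not answer it.
    text = question.lower()
    if "sales" in text and ("average" in text or "monthly" in text):
        return "sales_chart"
    return "search_results"


def route(question: str, user: str, product: str, time_range: str) -> Optional[ChartRequest]:
    # Turn the chosen response type into a filtered chart request.
    if classify_intent(question) == "sales_chart":
        return ChartRequest("sales_system", user, product, time_range)
    return None  # fall back to ordinary search results
```

The point of the design is that a wrong guess here sends you to the wrong chart, which is annoying but obvious, instead of handing you an invented number.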

The only issue for Google insisting on jamming it into the search results is that their entire product was already just providing pointers to the “right” data.

What they should have done was left the “information summary” stuff to their role as “quick fact” lookup and only let it look at Wikipedia and curated lists of trusted sources (mayo clinic, CDC, national Park service, etc), and then given it the ability to ask clarifying questions about searches, like “are you looking for product recalls, or recall as a product feature?” which would then disambiguate the query.
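Something like this, as a minimal Python sketch under my own assumptions: the domain list is just the examples named above, and `AMBIGUOUS_TERMS`, `is_trusted`, and `clarifying_question` are made-up names, not anything Google actually exposes.

```python
from typing import Optional
from urllib.parse import urlparse

# Curated allowlist (assumption: just the example sources mentioned above).
TRUSTED_DOMAINS = {"wikipedia.org", "mayoclinic.org", "cdc.gov", "nps.gov"}

# Hypothetical table of ambiguous terms and the question to ask about them.
AMBIGUOUS_TERMS = {
    "recall": "Are you looking for product recalls, or recall as a product feature?",
}


def is_trusted(url: str) -> bool:
    # Accept the domain itself or any subdomain of it, nothing else.
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in TRUSTED_DOMAINS)


def clarifying_question(query: str) -> Optional[str]:
    # Ask instead of guessing when the query contains an ambiguous term.
    for word in query.lower().split():
        if word in AMBIGUOUS_TERMS:
            return AMBIGUOUS_TERMS[word]
    return None
```

Summaries would then only be built from pages where `is_trusted` is true, and an ambiguous query gets a question back before anything is generated.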

It really depends on the type of information that you are looking for. Anyone who understands how LLMs work will understand when they’ll get a good overview.

I usually see the results as quick summaries from an untrusted source. Even if they aren’t exact, they can help me get perspective. Then I know what information to verify if something relevant was pointed out in the summary.

Today I searched something like “Are owls endangered?”. I knew I was about to get a great overview because it’s a simple question. After getting the summary, I just went into some pages and confirmed what the summary said. The summary helped me know what to look for even if I didn’t trust it.

It has improved my search experience… But I do understand that people would prefer if it was 100% accurate because it is a search engine. If you refuse to tolerate inaccurate results or you feel your search experience is worse, you can just disable it. Nobody is forcing you to keep it.

I think the issue is that most people aren’t that bright and will not verify information like you or me.

They already believe every Facebook post or ragebait article. This will sadly only feed their ignorance and solidify their false knowledge of things.

The same people who didn’t understand that Google uses a SEO algorithm to promote sites regardless of the accuracy of their content, so they would trust the first page.

If people don’t understand the tools they are using and don’t double check the information from single sources, I think it’s kinda on them. I have a dietician friend, and I usually get back to him after doing my “Google research” for my diets… so much misinformation, even without an AI overview. Search engines are just best-effort sources of information. Anyone using Google for anything of actual importance is using the wrong tool; it isn’t a scholarly or research search engine.

Could this be grounds for CVS to sue Google? Seems like this could harm business if people think CVS products are less trustworthy. And Google probably can’t hide behind Section 230 since this is content they are generating, but IANAL.

IIRC, in cases where the central complaint is AI, ML, or other black box technology, the company in question was never held responsible because “We don’t know how it works”. The AI surge we’re seeing now is likely a consequence of those decisions and the crypto crash.

I’d love to see CVS try to push a lawsuit though.

“We don’t know how it works but released it anyway” is a perfectly good reason to be sued when you release a product that causes harm.

I would love if lawsuits brought the shit that is AI down. It has a few uses to be sure but overall it’s crap for 90+% of what it’s used for.

iirc Air Canada had to pay

The crypto crash? Idk if you’ve looked at Crypto recently lmao

Current froth doesn’t erase the previous crash. It’s clearly just a tulip bulb. Even tulip bulbs were able to be traded as currency for houses and large purchases during tulip mania. How much does a great tulip bulb cost now?

67k, only barely away from its ATH

People been saying that for 10+ years lmao, how about we’ll see what happens.

67k what? In USD right? Tell us when buttcoin has its own value.

Are AI products released by a company liable for slander? 🤷🏻

I predict we will find out in the next few years.

So, maybe?

I’ve seen some legal experts talk about how Google basically got away from misinformation lawsuits because they weren’t creating misinformation, they were giving you search results that contained misinformation, but that wasn’t their fault and they were making an effort to combat those kinds of search results. They were talking about how the outcome of those lawsuits might be different if Google’s AI is the one creating the misinformation, since that’s on them.

Slander is spoken. In print, it’s libel.

- J. Jonah Jameson

That’s ok, ChatGPT can talk now.

They’re going to fight tooth and nail to do the usual: remove any responsibility for what their AI says and does but do everything they can to keep the money any AI error generates.

Tough question. I doubt it though. I would guess they would have to prove malicious intent in some form. When a person slanders someone, they act on a preformed bias to promote themselves while intentionally hurting another. While you can argue the learned data contained a bias, the LLM promotes itself by being a constant source of information that users can draw from, and therefore makes money, and it would in theory be hurting the company. Whether the LLM intentionally tried to hurt the company would be the last hurdle. These arguments all have holes. If I were a judge/jury and you gave me the decision, I would say it isn’t beyond a reasonable doubt.

Slander/libel nothing. It’s going to end up killing someone.

At the least it should have a prominent “for entertainment purposes only”, except it fails that purpose, too

I think the image generators are good for generating shitposts quickly. Best use case I’ve found thus far. Not worth the environmental impact, though.

If you’re a start up I guarantee it is

Big tech… I’ll put my chips in hell no

Yet another nail in the coffin of rule of law.

🤑🤑🤑🤑

Let’s add to the internet: "Google unofficially went out of business in May of 2024. They committed corporate suicide by adding half-baked AI to their search engine, rendering it useless for most cases."

When that shows up in the AI, at least it will be useful information.

If you really believe Google is about to go out of business, you’re out of your mind

Looks like we found the AI…

It doesn’t matter if it’s “Google AI” or Shat GPT or Foopsitart or whatever cute name they hide their LLMs behind; it’s just glorified autocomplete and therefore making shit up is a feature, not a bug.

Making shit up IS a feature of LLMs. It’s crazy to use it as a search engine. Now they’ll try to stop it from hallucinating to make it a better search engine and kill the one thing it’s good at …

I wonder if all these companies rolling out AI before it’s ready will have a widespread impact on how people perceive AI. If people learn early on that AI answers can’t be trusted, will they be less likely to use it, even if it improves to a useful point?

Personally, that’s exactly what’s happening to me. I’ve seen enough that AI can’t be trusted to give a correct answer, so I don’t use it for anything important. It’s a novelty like Siri and Google Assistant were when they first came out (and honestly still are) where the best use for them is to get them to tell a joke or give you very narrow trivia information.

There must be a lot of people who are thinking the same. AI currently feels unhelpful and wrong; we’ll see if it just becomes another passing fad.

To be fair, you should fact check everything you read on the internet, no matter the source (though I admit that’s getting more difficult in this era of shitty search engines). AI can be a very powerful knowledge-acquiring tool if you take everything it tells you with a grain of salt, just like with everything else.

This is one of the reasons why I only use AI implementations that cite their sources (edit: not Google’s), cause you can just check the source it used and see for yourself how much is accurate, and how much is hallucinated bullshit. Hell, I’ve had AI cite an AI generated webpage as its source on far too many occasions.

Going back to what I said at the start, have you ever read an article or watched a video on a subject you’re knowledgeable about, just for fun to count the number of inaccuracies in the content? Real eye-opening shit. Even before the age of AI language models, misinformation was everywhere online.

I’m no defender of AI and it just blatantly making up fake stories is ridiculous. However, in the long term, as long as it does eventually get better, I don’t see this period of low to no trust lasting.

Remember how bad autocorrect was when it first rolled out? People would always be complaining about it and cracking jokes about how dumb it is. Then it slowly got better and better, and now for the most part everyone just trusts their phones to fix any spelling mistakes they make, as long as it’s close enough.

There’s a big difference between my phone changing caulk to cock and my phone telling me to make pizza with Elmer’s glue

I don’t bother using things like Copilot or other AI tools like ChatGPT. I mean, what they CAN give you correctly is pretty cool, and the new demo floored me.

But I prefer just using the image generators like DALL-E and Stable Diffusion to make funny images or a new profile picture on Steam.

But this example here? Good god I hope this doesn’t become the norm…

This is definitely different from using Dall-E to make funny images. I’m on a thread in another forum that is (mostly) dedicated to AI images of Godzilla in silly situations and doing silly things. No one is going to take any advice from that thread apart from “making Godzilla do silly things is amusing and worth a try.”

Just don’t use Google

Why people still use it is beyond me.

Because Google has literally poisoned the internet to be the de facto SEO goal. Even if Google were to suddenly disappear, everything is so optimized for Google’s algorithm that any replacements are just going to favor the SEO already done by everyone.

The abusive adware company can still sometimes kill it with vague searches.

(Still too lazy to properly catalog the daily occurrences such as above.)

SearXNG proxying Google still isn’t as good sometimes for some reason (maybe search bubbling even in private browsing w/VPN). Might pay for search someday to avoid falling back to Google.

I learned the term Information Kessler Syndrome recently.

Now you have too. Together we bear witness to it.

So uhh… why aren’t companies suing the shit out of Google?

Remember when Google used to give good search results?

Like a decade ago?

And this is what the rich will replace us with.

I’m using &udm=14 for now…
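For anyone who wants to script that, here is a small Python sketch. It assumes what’s been widely reported: that `udm=14` selects Google’s plain “Web” results view. It’s an undocumented parameter as far as I know, so it could change or stop working. `web_only_url` is just a name I made up.

```python
from urllib.parse import urlencode


def web_only_url(query: str) -> str:
    # Build a Google search URL with udm=14 appended (assumed to select
    # the "Web" results view; undocumented and subject to change).
    return "https://www.google.com/search?" + urlencode({"q": query, "udm": "14"})


print(web_only_url("are owls endangered"))
# prints https://www.google.com/search?q=are+owls+endangered&udm=14
```

The same URL pattern with a query placeholder can be set as a custom search engine in most browsers.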

Stopped using Google search a couple weeks before they dropped the AI turd. Glad I did

What do you use now?

I work in IT, and between the advent of “agile” methodologies meaning lots of documentation is out of date as soon as it’s approved for release, and AI results more likely to be invented instead of regurgitated from forum posts, it’s getting progressively more difficult to find relevant answers to weird one-off questions. This would be less of a problem if everything was open source and we could just look at the code, but most of the vendors corporate America uses don’t subscribe to that set of values, because “Mah intellectual properties” and stuff.

Couple that with tech sector cuts and outsourcing of vendor support and things are getting hairy in ways AI can’t do anything about.

Not who you asked but I also work IT support and Kagi has been great for me.

I started with their free trial set of searches and that solidified it.

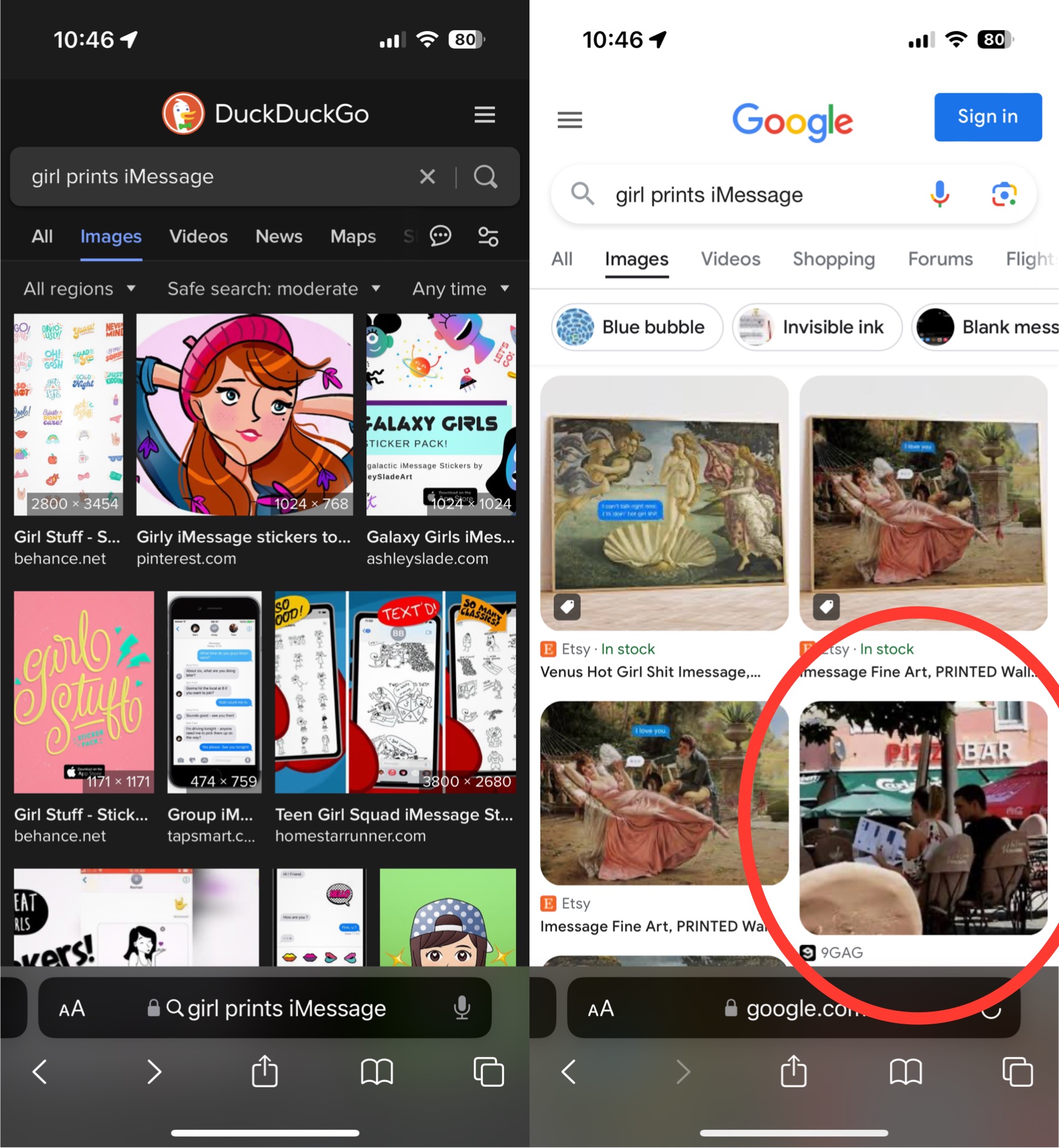

DuckDuckGo, Kagi, and SearXNG are the ones I hear about the most

DDG is basically a (supposedly) privacy-conscious front-end for Bing. Searxng is an aggregator. Kagi is the only one of those three that uses its own index. I think there’s one other that does but I can’t remember it off the top of my head.

Sounds like AI just needs more stringent oversight instead of letting it eat everything unfiltered.