Ok, let me give a little bit of context. I will turn 40 in a couple of months and I’ve been a C++ software developer for more than 18 years. I enjoy coding, and I enjoy writing “good” code: readable and so on.

However, for the past few months I have become really afraid for the future of the job I like, given the progress of artificial intelligence. Very often I can’t sleep at night because of this.

I fear that my job, while not completely disappearing, will become a very boring job consisting of debugging automatically generated code, or that it will disappear altogether.

For now, I’m not using AI. A few of my colleagues do, but I don’t want to because, one, it removes a part of coding that I like, and two, I have the feeling that using it is cutting off the branch I’m sitting on, if you see what I mean. I fear that in the near future, people not using it will be fired because management sees them as less productive…

Am I the only one feeling this way? I have the feeling that all tech people are enthusiastic about AI.

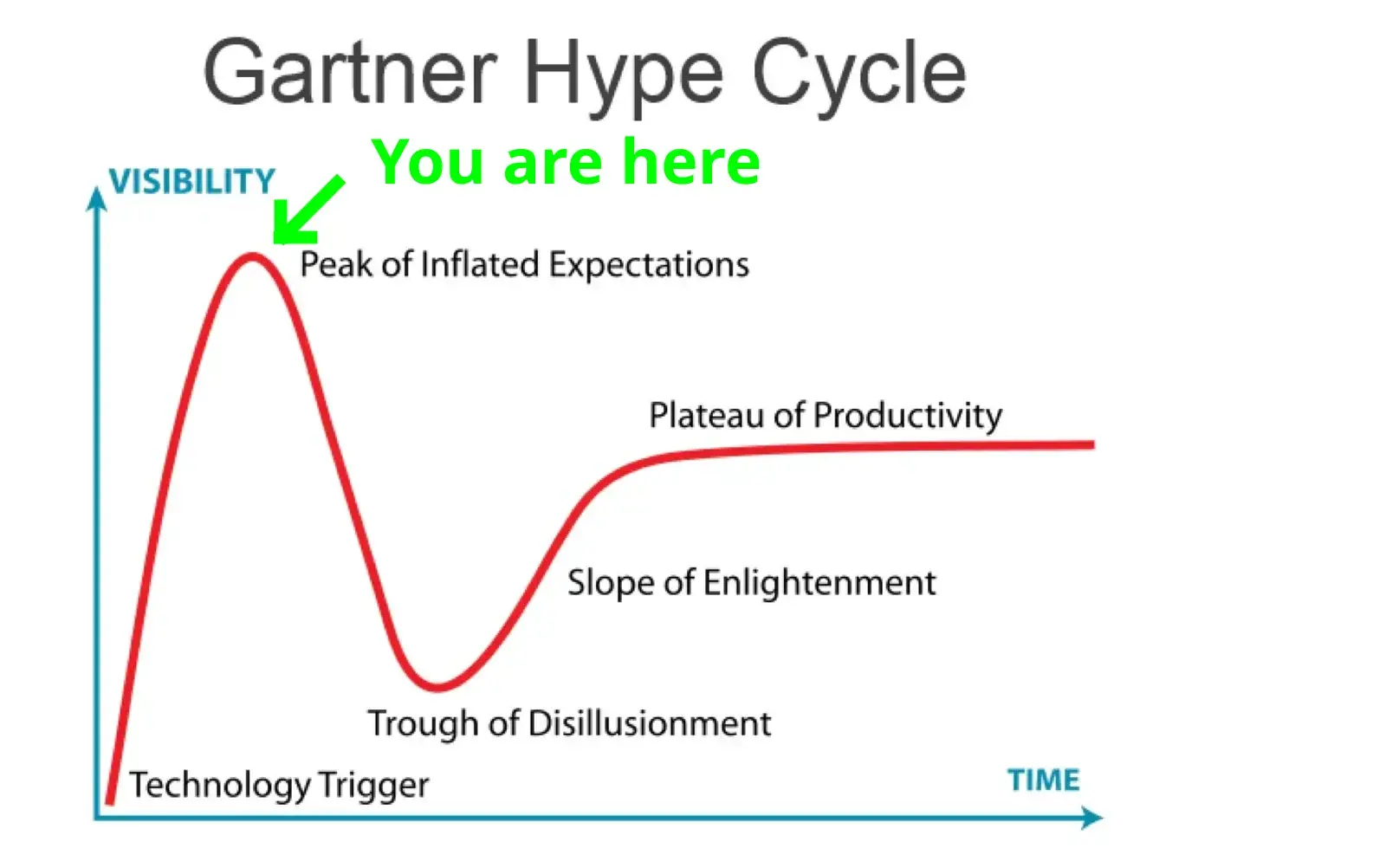

The trough of disillusionment is my favorite.

Kind of nice to see NFTs breaking through the floor at the trough of disillusionment, never to return.

Currently at the crossroads between the trough of disillusionment and the slope of enlightenment.

I’m not worried about AI replacing employees

I’m worried about managers and bean counters being convinced that AI can replace employees

It’ll be like outsourcing all over again. How many companies outsourced, then walked it back several years later and only hire in the US now? It could be really painful in the short term if that happens (if you consider several years to a decade short term).

Clearly my main concern… But after reading a lot of reassuring comments, I’m more and more convinced that humans will always be superior.

This is their only retaliation for the fact that managers have already been replaced by git tools and CI.

So, I asked ChatGPT to write a quick PowerShell script to find the number of months between two dates. The first answer it gave me took the number of days between them and divided by 30. I told it that it needed to be more accurate than that, so it wrote a while loop that added 1 month to the first date until it was larger than the second date. Not only is that obviously the most inefficient way to do it, but it had no check that the date being incremented was actually the earlier one, so you could just end up with zero. The results I got from Copilot were not much better.
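For comparison, here is a minimal sketch of a more accurate approach, written in Python rather than PowerShell; the function name `whole_months_between` is hypothetical. It computes the month difference arithmetically from the year and month fields (no loop, no divide-by-30), subtracts one if the end date hasn’t reached the start date’s day of the month, and returns a negative count for a reversed pair instead of silently returning zero. The convention is an assumption: a month counts as complete only when the end day is at least the start day, so Jan 31 to Feb 28 gives 0, whereas a loop built on .NET’s `DateTime.AddMonths` (which clamps to the last valid day of the month) may count it differently.

```python
from datetime import date


def whole_months_between(start: date, end: date) -> int:
    """Number of complete calendar months from start to end (hypothetical helper).

    Negative when end is earlier than start, instead of collapsing to zero.
    Assumed convention: a month is complete only once end.day >= start.day.
    """
    if end < start:
        return -whole_months_between(end, start)
    # Difference in calendar months, ignoring the day of the month.
    months = (end.year - start.year) * 12 + (end.month - start.month)
    # If end hasn't reached start's day-of-month yet, the last month is incomplete.
    if end.day < start.day:
        months -= 1
    return months


print(whole_months_between(date(2023, 11, 30), date(2024, 2, 29)))  # 2
print(whole_months_between(date(2024, 3, 15), date(2024, 1, 15)))   # -2
```

This runs in constant time regardless of how far apart the dates are, unlike the add-a-month loop.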

From my experience, unless there is existing code that does exactly what you want, these AIs are not at the level of an experienced dev. Not by a long shot. As they improve, they’ll obviously get better, but as with anything in this industry, you have to keep up and adapt or you’ll get left behind.

The thing is that you need several AIs: one to write the question, so that the one that codes gets the question you actually want answered, then a third one that writes checks and follows up on the code written.

When run in a feedback loop like this, the quality you get out will be much higher than just asking ChatGPT to make something.
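The three-AI setup described above can be sketched as a small control loop. This is a hypothetical sketch, not any particular tool’s API: the three “AIs” are plain Python callables standing in for whatever model you would actually call, and the names `feedback_loop`, `Review` and `max_rounds` are made up for illustration. It only shows the structure: refine the request once, draft code from the refined spec, review the draft against that spec, and feed the critique back until the reviewer approves or the round budget runs out.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical stand-ins: in practice each would wrap a call to some LLM.
Model = Callable[[str], str]


@dataclass
class Review:
    approved: bool  # did the checker accept the draft?
    feedback: str   # what the checker wants changed


def feedback_loop(
    request: str,
    refiner: Model,                          # AI 1: turns the raw request into a precise spec
    coder: Model,                            # AI 2: writes code from the spec
    reviewer: Callable[[str, str], Review],  # AI 3: checks code against the spec
    max_rounds: int = 3,
) -> Tuple[str, bool]:
    """Return the last reviewed draft and whether the reviewer approved it."""
    spec = refiner(request)
    code = coder(spec)
    for attempt in range(max_rounds):
        review = reviewer(spec, code)
        if review.approved:
            return code, True
        if attempt + 1 < max_rounds:
            # Feed the previous draft and the critique back to the coder.
            code = coder(
                f"{spec}\n\nPrevious attempt:\n{code}\n\n"
                f"Reviewer feedback:\n{review.feedback}"
            )
    return code, False
```

Returning the approval flag alongside the code keeps a loop that ran out of rounds from looking like a success; how much the extra rounds actually help depends on how good the reviewer is.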

AI is a really bad term for what we are all talking about. These sophisticated chatbots are just cool tools that make coding easier and faster, and for me, more enjoyable.

What the calculator is to math, LLMs are to coding, nothing more. Actual sci-fi style AI, like self-aware code, would be scary if it were ever demonstrated to even be possible, which it hasn’t been.

If you ever have a chance to use these programs to help you speed up writing code, you will see that they absolutely do not live up to the hype attributed to them. People shouting the end is nigh are seemingly exclusively people who don’t understand the technology.

I’ve never had to double check the results of my calculator by redoing the problem manually, either.

Haven’t we started using AGI, or artificial general intelligence, as the term to describe the kind of AI you are referring to? That self aware intelligent software?

Now AI just means reactive code designed to mimic certain behaviours, or even self-learning algorithms.

That’s true, and language is constantly evolving for sure. I just feel like AI is a bit misleading because it’s such a loaded term.

I get what you mean, and I think a lot of laypeople do have unreasonable ideas about what LLMs are capable of, but as a counterpoint, we have used the label AI to refer to very simple bits of code for decades, e.g. video game characters.

Yeah, this is the thing that always bothers me. Due to the very nature of being large language models, they can generate convincing language. Likewise, image “AI” can generate convincing images. Calling it AI is both a PR move for branding and an attempt to conceal the fact that it’s all just regurgitating bits of stolen copyrighted content.

Everyone talks about AI “getting smarter”, but by the very nature of how these types of algorithms work, they can’t “get smarter”. Yes, you can make them work better, but they will still only be either interpolating or extrapolating from the training set.


This is a real danger in the long term. If AI and robotics advance far enough, they could detach a big portion of the lower and middle classes from society’s flow of wealth and disrupt structures that have existed since the early industrial revolution. The educated common man stops being an asset. The whole world becomes a banana republic where only industry and government are needed, and there is an impassable gap between common people and the uncaring elite.

Right. I agree that in our current society, AI is a net loss for most of us. There will be a few lucky ones who will almost certainly be paid more than they are now, but that will come at the cost of everyone else, and even they will certainly be paid less than the shareholders and executives. The end result is a much lower quality of life for basically everyone. Remember what the Luddites were actually protesting and you’ll see how AI is no different.

I’m a composer. My Facebook is filled with ads like “Never pay for music again!”. It’s fucking depressing.

You’re certainly not the only software developer worried about this. Many people across many fields are losing sleep thinking that machine learning is coming for their jobs. Realistically automation is going to eliminate the need for a ton of labor in the coming decades and software is included in that.

However, I am quite skeptical that neural nets are going to be reading and writing meaningful code at large scales in the near future. If they did we would have much bigger fish to fry because that’s the type of thing that could very well lead to the singularity.

I think you should spend more time using AI programming tools. That would let you see how primitive they really are in their current state and learn how to leverage them for yourself. It’s reasonable to be concerned that employees will need to use these tools in the near future. That’s because these are new, useful tools and software developers are generally expected to use all tooling that improves their productivity.

i’m still in uni so i can’t really comment on how the job market is reacting or is going to react to generative AI, but what i can tell you is that it has never been easier to half-ass a degree. any code, report or essay written has almost certainly come from an LLM, and most of it either makes no sense or barely works. the only people not using AI are the ones who don’t have access to it.

i feel like it was always like this and everyone slacked as much as they could, but i just can’t believe it, it’s shocking. the lack of fundamental, basic knowledge has made working with anyone on anything such a pain in the ass. group assignments are dead. almost everyone else’s work comes from a chatgpt prompt that didn’t describe their part of the assignment correctly. as a result, not only is it buggy as hell, but when you actually decide to debug it you realize it doesn’t even do what it’s supposed to do, and now you have to spend two full days implementing every single part of the assignment yourself because “we’ve done our part”.

everyone’s excuse is “oh well, university doesn’t teach anything useful, why should i bother when i’m learning <insert js framework>?” and then you look at their project and it’s just another boilerplate react calculator app in which, you guessed it, most of the code is generated by AI. i’m not saying everything in college is useful or that you are a sinner for using somebody else’s code. be my guest: dodge classes and copy-paste stuff when you don’t feel like doing it, but at least give a damn about the degree you are putting your time into and don’t dump your work on somebody else.

i hope no one carries this kind of attitude towards their work into the job market. if most members of a team are using AI as their primary tool to generate code, i don’t know how anyone on that team can trust anyone else, which means more and longer code reviews and meetings, and thus slower production. with this, bootcamps getting more scammy and most companies giving up on junior devs, i really don’t think the software industry is heading in a good direction.

I think I will ask people if they use AI to write code when I am interviewing them for a job and reject anyone who does.

They haven’t replaced me with cheaper non-artificial intelligence yet, and that’s leaps and bounds better than AI.

Yeah, the real danger is probably that it will be harder for junior developers to be considered worth the investment.

As someone with deep knowledge of the field, quite frankly, you should know that AI isn’t going to replace programmers. Whoever says that is selling either a snake oil product or their expertise as a “futurologist”.

Could you elaborate? I don’t have deep knowledge of the field, I only write rudimentary scripts to make some parts of my job easier, but from the few videos on the subject that I’ve seen, and from the few times I asked AI to write a piece of code for me, I’d say I share the OP’s worry. What would you say humans add to programming that can’t (and can never) be replaced by AI?

I use GitHub Copilot from work. I generally use Python. It doesn’t take away anything at least for me. It’s big thing is tab completion; it saves me from finishing some lines and adding else clauses. Like I’ll start writing a docstring and it’ll finish it.

Once in a while I can’t think of exactly what I want so I write a comment describing it and Copilot tries to figure out what I’m asking for. It’s literally a Copilot.

Now if I go and describe a big system or interfacing with existing code, it quickly gets confused and tends to get in the weeds. But man if I need someone to describe a regex, it’s awesome.

Anyway, I think there are free alternatives out there that probably work just as well. At the end of the day, it’s up to you, though I’d say don’t knock it till you try it. If you don’t like it, stop using it.

This. I’ve seen SO much hype and FUD and all the while there are thousands of developers grinding out code using these tools.

Does code quality suffer? In my experience, ONLY if they have belt-wielding bean counters forcing them to ship well before it’s actually ready for prime time :)

The tools aren’t perfect, and they most DEFINITELY aren’t a panacea. The industry is in a huge contraction phase right now, so I think we have a while before we have to worry about AI-induced layoffs, and if that happens, the folks doing the laying off are being incredibly short-sighted and are likely headed for a high-impact date with a wall in the near future anyway.

Its* big thing

Nobody knows if and when programming will be automated in a meaningful way. But once we have the tech to do it, we can automate pretty much all work. So I think this will not be a problem for programmers until it’s a problem for everyone.

So far it is mainly an advanced search engine, someone still needs to know what to ask it, interpret the results and correct them. Then there’s the task of fitting it into an existing solution / landscape.

Then there are the 50% of non-coding tasks you have to perform once you’re no longer a junior. I think it’ll be mainly useful for getting less experienced developers productive faster, but it will require more oversight from experienced devs.

At least for the way things are developing at the moment.

I wish your fear were justified! I’ll praise anything that can kill work.

Alas, we’re not there yet. Current AI is a glorified search engine. The problem it will have is that most code today is unmaintainable garbage, so for now AI can only produce unmaintainable garbage.

First the software industry needs to properly industrialise itself. Then there will be code to copy and reuse.

I’d like to thank you all for all your interesting comments and opinions.

I see a general trend of not being too worried, because of how the technology works.

The worrisome part is what capitalism and management might think, but that’s just an update of the old joke “A product manager is a guy who thinks 9 women can make a baby in 1 month”. And anyway, if not this, there will be something else; that’s how our society is.

Now I feel better, and I understand that my initial reaction of fearing and rejecting this technology is perhaps a very bad idea. I really need to start using it a bit in order to get to know this technology. I’ve already found some use cases that could help me (getting inspiration when naming things, generating repetitive unit test cases, figuring out well-known APIs, …).

Many have already touched on this, but you hit the nail on the head with the third paragraph. It’s always smart to prepare, but any attempt to use this to reduce the workforce will go horribly. Saving isn’t crazy in this regard, but I wouldn’t plan on it being long term until LLMs become less expensive, reason better and, most importantly, handle longer context windows without their performance degrading. These aren’t easy fixes; they brush up against fundamental limits of the tech.