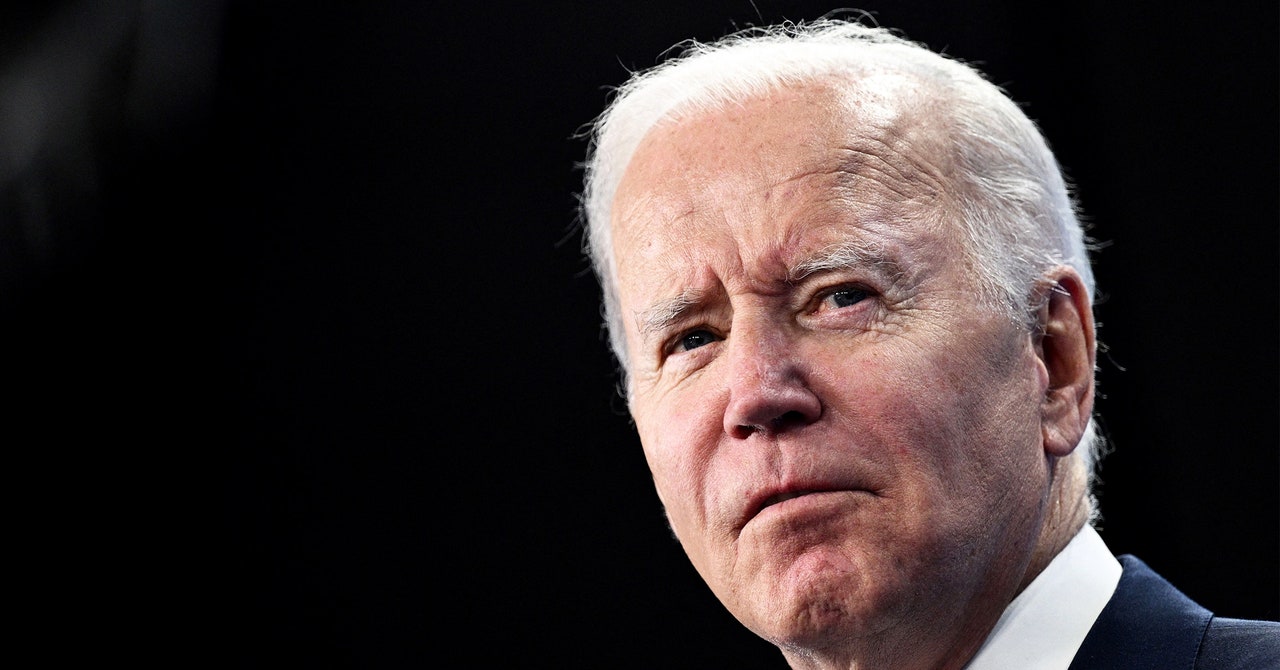

Highlights: The White House issued draft rules today that would require federal agencies to evaluate and constantly monitor algorithms used in health care, law enforcement, and housing for potential discrimination or other harmful effects on human rights.

Once in effect, the rules could force changes in US government activity dependent on AI, such as the FBI’s use of face recognition technology; the bureau has been criticized for not taking steps called for by Congress to protect civil liberties. The new rules would require government agencies to assess existing algorithms by August 2024 and stop using any that don’t comply.

“If the benefits do not meaningfully outweigh the risks, agencies should not use the AI,” the memo says. But the draft memo carves out an exemption for models that deal with national security and allows agencies to effectively issue themselves waivers if ending use of an AI model “would create an unacceptable impediment to critical agency operations.”

This tells me that nothing is going to change if people can just say that dropping their algorithms would make them too inefficient. Great sentiment, but this loophole will make it useless.

Folksy narrator: “Turns out, the U.S. government cannot operate without racism.”

Democrats are so fucking naive. They actually think that a system of permission slips is sufficient to protect us from the singularity.

OpenAI’s original mission, before they forgot it, was the only workable method: distribute the AI far and wide to establish a multipolar ecosystem.

> Great sentiment but

It’s not a “great sentiment” - it’s essentially just more of the same liberal “let’s pretend we care by doing something completely ineffective” posturing and little else.

I mean, that broadly seems like a good thing. Execution is important, but on paper this seems like the kind of forward-thinking policy we need.

Quite frankly, it didn’t put enough restrictions on the various “national security” agencies. So while it may help stem the tide of irresponsible usage by many of the lesser-impact agencies, it doesn’t do the same for the agencies that we know will be the worst offenders (and have been the worst offenders).

I really don’t understand the downvotes on this comment…

NSA bots downvoting.

Now they’ll stop so they don’t let on, but then they’ll start again to throw you off.

Hell fucking yea. Who is this Biden guy?

I swear to god there has to be an entire chapter in Gödel, Escher, Bach about how this is literally impossible.

Wow, it’s been years since I read GEB…

I should revisit it. Thanks!

You’re never going to be able to formally prove anything as nebulous as “harm”, full stop, so this isn’t a very convincing argument imo.

Achilles: /facepalm

Is it already too late for us? Does anyone truly believe that will be enough to protect us?

Watchmen watching over themselves, what could possibly go wrong, right?

This isn’t a comic book. It’s good policy.

Interesting. I want algorithms to warn us about potential harms by Joe Biden. What if we were able to fund an AI run by the GAO that can tell us when government decisions make the majority of our lives worse?

It’s a long way off, and it might be a bad idea to trust an AI outright, but I just wish we had a more data-informed government.

You might be interested in data.gov. The Obama admin kicked off the Government Open Data Initiative to provide transparency in government. Agencies have been given a means to publish their data, which US taxes pay for. You’d be surprised what’s in there. It’s not an algorithm, but you could certainly build one from that if you wanted to.