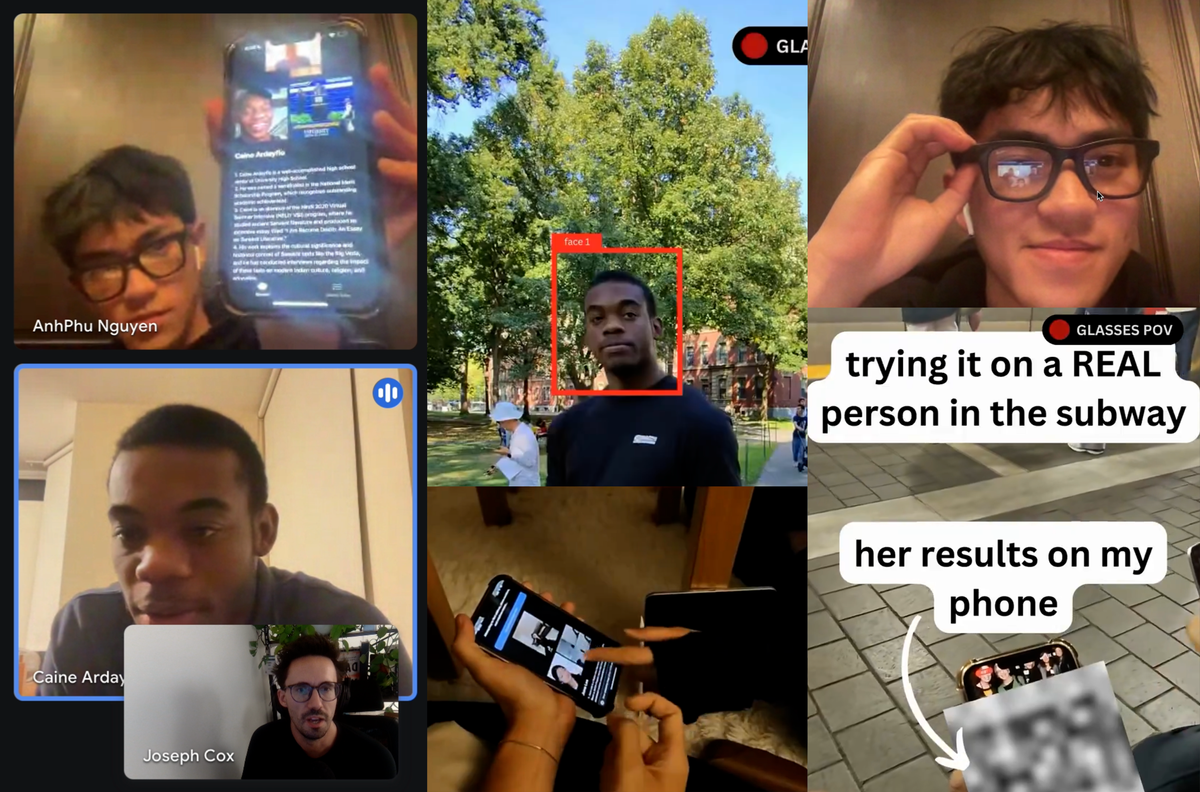

The project is designed to raise awareness of what is possible with this technology.

This has nothing to do with smart glasses, and everything to do with surveillance capitalism. You could do the same thing with a smartphone, or any camera + computer. All this does is highlight how everyone’s most sensitive data has been aggregated by numerous corporations and is available to anyone who will pay for it. There was a time when capitalism presented itself as the “free” and privacy-preserving antithesis to Soviet-style communist surveillance, yet no KGB agent ever had access to a system with 1/100th the surveillance capabilities that 21st-century capitalism now sells freely for profit. If you need proof, a couple of college students were able to create every stalking victim’s worst nightmare.

I mean sort of.

It does mean that walking around with smart glasses will have people potentially reacting to you like you are waving a recording smartphone in their face.

Which is not great for product adoption, if you get my drift.

Soon smart glasses will look like regular glasses though. Miniaturisation isn’t about to stop.

New style: Frameless glasses or you are creeping.

Frameless glasses AND clear temples

Yeah, the Ray-Bans in question are completely discreet unless you’ve been told or you’ve seen them already.

Pretty much no phone is directed at everyone else’s face all the time; that alone is the huge difference. It’s the difference between someone using their phone and someone actively holding it upright to record the crowd. Surveillance cameras might be out there too, but their footage isn’t seen by everyone (this differs by country; some even have to delete it after 24h unless there was a crime).

People would quickly tell you to stop recording if you held up your phone all the time, even in situations where you’re closer to each other, like on public transport.

Now we just need to use the user information to check their net worth, and if it’s above a certain amount it needs to hover a quest marker above that person. I’m curious to see how long before privacy laws get stronger.

If it’s a billionaire it’s just a combat marker.

Didn’t know Watch Dogs was becoming a reality!?

They’ll probably just end up making a (very expensive) method of obscuring themselves from the recognition tech. That way they won’t need to pass any laws, and ad companies (or cops, or anyone else who knows how to jailbreak their hardware, probably) can still take advantage of the technology in some way.

Because 💰

This is what will happen.

A company called Clearview AI broke that unwritten rule and developed a powerful facial recognition system using billions of images scraped from social media. Primarily, Clearview sells its product to law enforcement. Clearview has also explored a pair of smart glasses that would run its facial recognition technology. The company signed a contract with the U.S. Air Force on a related study.

Just another reason to not post all your images to social media. Share with family/friends who care, but that’s it.

Right?! All it takes to save your privacy is not having social media, but no one is willing to do that.

The main concern I have is unavoidably having my picture taken. Say I go to a family gathering; of course they will take my picture if it’s a big event. They will then probably share it everywhere. I can’t reasonably say “don’t post this picture on the internet”; they probably will.

Do not share the image in a private Facebook group. Don’t post it on popular direct messaging services.

The only way is via some privacy-preserving E2E-encrypted file storage server (which I still don’t trust) or via your own Matrix server (which I do trust).

private Facebook group

Does such a thing actually exist? Seems that “private” and “Facebook” really shouldn’t be in the same sentence together.

People (the general populace) think that if a group visibility is set to Private, then it’s truly private 🤷🏻

I remember this happening with Google Glass as well

https://www.theguardian.com/technology/2013/jun/03/google-glass-facial-recognition-ban

Ahh, Glassholes

Time to get myself a scramble suit.

Surely the original “someone” is Meta. Good to have a redundant system I guess /s

What does the article say? It’s asking me to sign up.

NVM got it: https://archive.is/a2VYP

At this point, masking up in public provides protection for both health and privacy.

Apple already demonstrated that you can still get pretty darn close from eyes and hair. Combine that with a bit of logic (There is a 40% chance this is Sally Smith but she also lives three streets over and works on that street) and you still have very good odds.
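To put rough numbers on that "bit of logic": it is basically Bayes' rule. Here is a minimal Python sketch, assuming the partial face match gives the 40% figure above and treating it as a prior over a small candidate list. Everything else is made up for illustration: the other two candidates, their match scores, and the location likelihoods (how plausible it is to see each person on that street) are hypothetical values, not output from any real system.

```python
def posterior(prior: dict[str, float], likelihood: dict[str, float]) -> dict[str, float]:
    """Bayes' rule over a finite candidate set: posterior is proportional to prior * likelihood."""
    unnormalized = {name: prior[name] * likelihood[name] for name in prior}
    total = sum(unnormalized.values())
    return {name: value / total for name, value in unnormalized.items()}


# Partial (eyes + hair) face match scores, used as the prior.
# Only the 40% for Sally comes from the comment above; the rest is hypothetical.
face_match = {
    "Sally Smith": 0.40,
    "Candidate B": 0.35,
    "Candidate C": 0.25,
}

# Hypothetical likelihood of being seen on this street, given who the person is.
# Sally lives three streets over and works here, so her value is much higher.
seen_on_this_street = {
    "Sally Smith": 0.50,
    "Candidate B": 0.02,
    "Candidate C": 0.02,
}

result = posterior(face_match, seen_on_this_street)
for name, probability in result.items():
    print(f"{name}: {probability:.1%}")
```

With these assumed inputs, the weak 40% match ends up well above 90% once location is factored in, which is the point: a mask lowers the face score, but the side information does most of the work.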

Well… unless you are black, brown, or Asian. Since the facial recognition tech is heavily geared toward white people because tech bros.

Facial recognition works better on white people because, mathematically, they provide more information in real world camera use cases.

Darker skin reflects less light and dark contrast is much more difficult for cameras to capture unless you have significantly higher end equipment.

For low-contrast greyscale security cameras? Sure.

For any modern even SD color camera in a decently lit scenario? Bullshit. It is just that most of this tech is usually trained/debugged on the developers and their friends and families and… yeah.

I always love to tell the story of, maybe a decade and a half ago, evaluating various facial recognition software. White people never had any problems. Even the various AAPI folk in the group would be hit or miss (except for one project out of Taiwan that was ridiculously accurate). And we weren’t able to find a single package that consistently identified even the same black person.

And even professional shills like MKBHD will talk around this problem during his review ads (the Apple Vision video being particularly funny).

You’re not wrong. Research into models trained on racially balanced datasets has shown better recognition performance along with reduced bias. This was on limited, GAN-generated faces, so it still needs to be reproduced with real-world data, but it shows promise that balancing training data should reduce bias.

Yeah but this is (basically) reddit and clearly it isn’t racism and is just a problem of multi-megapixel cameras not being sufficient to properly handle the needs of phrenology.

There is definitely some truth to needing to tweak how feature points (?) are computed and the like. But yeah, training data goes a long way and this is why there was a really big push to get better training data sets out there… until we all realized those would predominantly be used by corporations and that people don’t really want to be the next Lenna because they let some kid take a picture of them for extra credit during an undergrad course.

You okay?