Someone needs to (Win + X, U, R).

- 1 Post

- 24 Comments

1·30 days ago

Can’t say I’ve used that… Yet. I like Nextcloud because, besides being compatible with Linux and Windows and having an Android app, it also has a simple web UI for accessing files. It’s probably closer to a self-hosted OneDrive than anything else I can think of. I kinda like the simplicity of PairDrop, though.

2·1 month ago

Hell yeah, it will. I need one of those bad boys.

7·1 month ago

Hah. I see you’re looking into ZFS caching. Highly recommend. I’m running Ubuntu 24.04 root on ZFS RAID 10: twelve data drives and one NVMe cache drive. Gotta say it’s performing exceptionally. ZFS is a bit tricky: it requires an HBA, not a RAID card. You may need to flash the RAID card to get it working, like I did. Beyond that, I have put together a GitHub repo for the install on ZFS RAID 10, but you should easily be able to change it to raidz2. Fair warning, it wipes the drives entirely.

1·1 month ago

Do you have any hosting in your home lab? Preferably something for running a Docker container, but a hypervisor could do the job too.

Nextcloud is an option if you do. Technically speaking you could properly protect it and make it public. You don’t have to do that though. Any file you upload on your computer could be copied to your phone or vice versa. If it’s public, then this could be done from anywhere.

1·2 months ago

I seed what you did there.

1·3 months ago

To go with this you can also look at https://www.goharddrive.com/. They provide white-label drives and refurbished drives, with a three-year warranty in my case. ~$110 USD for a 12 TB Seagate IronWolf.

1·3 months ago

Hah. I’m glad I’m not the only one who thought of ZFS. I do have a project involving it though.

1·3 months ago

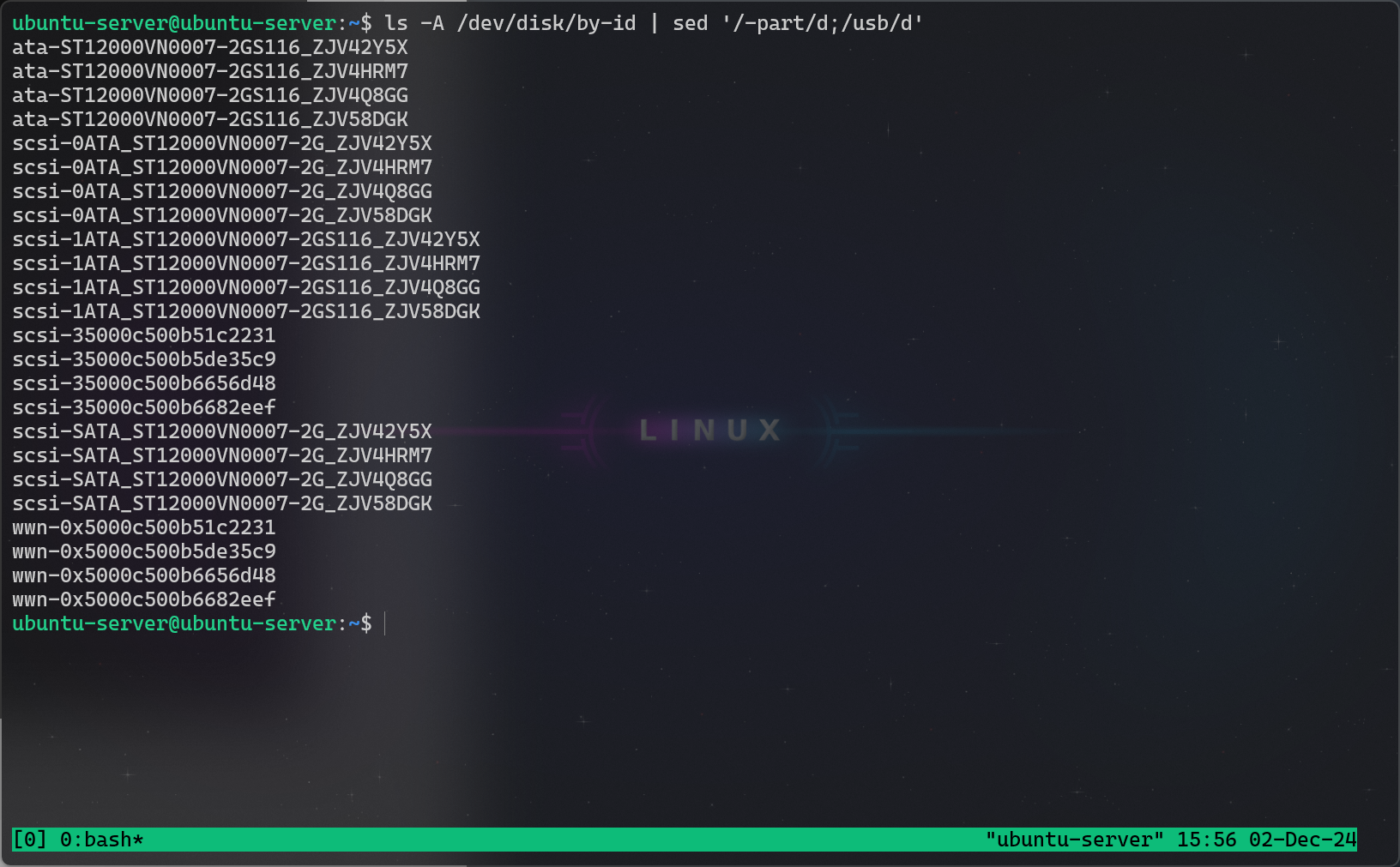

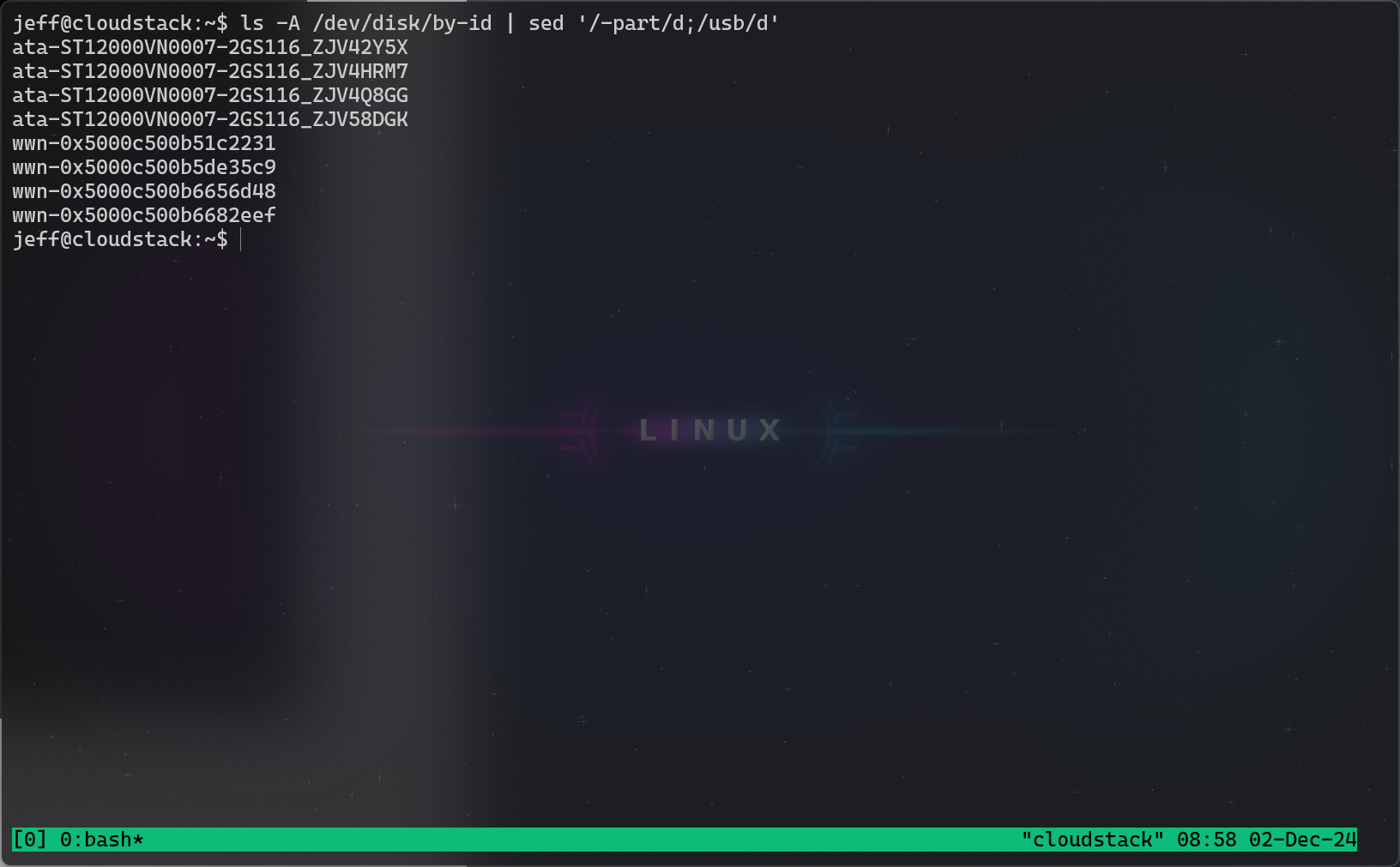

1·3 months agoHere is the exact issue that I’m having. I’ve included screenshots of the command I use to list HDDs on the live cd versus the same command run on Ubuntu 24.04. I don’t know anything about what is causing this issue so perhaps this is a time where someone else can assist. Now, the benefit to using /dev/disk/by-id/ is that you can be more specific about the device, so you can be sure that it is connected to the proper disk no matter the state that your environment is in. This is something that you need to do to have a stable ZFS install. But if I can’t do that with scsi disks, then that advantage is limited.

Windows Terminal for the win, btw.

Live CD:

Ubuntu 24.04 Installed:
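To compare the two environments without screenshots, the by-id listing could be captured as text. This is only a minimal sketch: the function name `list_disk_ids` and its optional directory argument are my own additions for illustration, and it assumes `readlink -f` is available (it is part of GNU coreutils).

```shell
# Print each persistent disk name and the kernel device it resolves to.
# The optional argument lets the function be pointed at another directory;
# it defaults to /dev/disk/by-id.
list_disk_ids() (
    dir="${1:-/dev/disk/by-id}"
    for link in "$dir"/*; do
        # Skip anything that is not a symlink (including an unmatched glob).
        [ -L "$link" ] || continue
        printf '%s -> %s\n' "$(basename "$link")" "$(readlink -f "$link")"
    done
)

# Example (run on the live CD and again on the installed system):
# list_disk_ids > disk-ids.txt
```

Diffing the two saved outputs should show exactly which IDs disappear after installation.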

1·3 months ago

Well… I have to admit my own mistake as well. I did assume it would have faster read and write speeds based upon my RAID knowledge and didn’t actually look it up until I was questioned about it. So I appreciate being kept honest.

While we have agreed on the read/write benefits of a ZFS RAID 10, there are a few disadvantages to a setup such as this. For one, I do not have the same level of redundancy. A raidz2 can lose any two hard drives. A ZFS RAID 10 is only guaranteed to survive one failure; it survives a second one as long as that does not take out an entire mirror. So overall, this setup is less redundant than raidz2.
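To put numbers on that redundancy gap, here is a small worked example. It assumes the pool is built purely from two-way mirrors, and the function name `two_disk_failures` is hypothetical.

```shell
# Count how many simultaneous two-disk failures a pool of two-way mirrors
# survives. A pair of failures is fatal only when both disks sit in the
# same mirror, so there is exactly one fatal pair per mirror.
two_disk_failures() (
    disks="$1"
    total=$(( disks * (disks - 1) / 2 ))   # all possible pairs of disks
    fatal=$(( disks / 2 ))                 # one fatal pair per mirror
    echo "$(( total - fatal )) of $total two-disk failures are survivable"
)

two_disk_failures 4   # prints: 4 of 6 two-disk failures are survivable
```

A raidz2 vdev survives every one of those pairs, which is the difference described above.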

Another drawback is that, for some reason, Ubuntu 24.04 does not recognize SCSI drives except on the live CD. Perhaps someone can help me with this to provide everyone with a better solution. The same disks that were visible on the live CD are not visible once the system is installed. It still technically works, but `zpool status rpool` will show that it is using sdb3 instead of the SCSI HDDs. This is fine technically; my HDDs are SATA anyway, so I just changed to the SATA HDDs. But if I could ensure that others don’t face this issue, it would result in a more reliable ZFS installation for them.

3·3 months ago

oOoo… 10 upvotes on a ZFS RAID 10. I feel like I got the perfect amount.

2·3 months ago

I had disk performance in mind. A ZFS RAID 10 beats a raidz2 in terms of read and write speeds. According to the Internet, that is. My instance will become a KVM host for 5 Kubernetes VMs, so it kinda needs a little bit of a boost. Who knows if it will work; I’m told that my buddy had issues with HDDs and had to go to SSDs.

2·3 months ago

That is totally fair. Actually, I just upgraded to 12 TB drives and that’s why I’m working on this. So huge props to your design choice. Also props for using ZFS; I feel like it flies under the radar a lot.

2·3 months ago

Interesting… Though I know nothing about your particular setup, or migrating existing data, I have a similar project in the works. This project is to automatically set up a ZFS RAID 10 on Ubuntu 24.04.

If you are interested in seeing how I am doing it, I used the OpenZFS root-on-ZFS guides for Debian/Ubuntu.

For the code, take a look at this GitHub repo: https://github.com/Reddimes/ubuntu-zfsraid10/

One thing to note is that this runs two zpools, one for / and one for /boot. It is also specifically UEFI, and if you need legacy boot you need to change the partitioning a little bit (see init.sh).

BE WARNED THAT THIS SCRUBS ALL FILESYSTEMS AND DELETES ALL PARTITIONS

To run it, load up a ubuntu-server live cd and run the following:

```shell
git clone --depth 1 https://github.com/Reddimes/ubuntu-zfsraid10.git
cd ubuntu-zfsraid10
chmod +x *.sh
vim init.sh # Change all disks to be relevant to your setup.
vim chroot.sh # Same thing here.
sudo ./init.sh
```

On first login, there are a few things I have not scripted yet:

```shell
apt update && apt full-upgrade
dpkg-reconfigure grub-efi-amd64
```

There are two ways to automate this: either I create a runonce.d service (here), or I add a script to the user’s profile.d directory that deletes itself after it has run. I also need to include a proper netplan configuration. I’m simply not there yet.
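The self-deleting variant might look something like the sketch below. `run_once` and the script path in the usage comment are names made up for illustration, and the sketch assumes the caller has permission to run and delete the script (files under /etc/profile.d are normally root-owned, so a regular user could not remove them).

```shell
# run_once SCRIPT
# Run SCRIPT and delete it only if it succeeded, so that a failed
# first-login step is retried on the next login.
run_once() {
    # Nothing to do if the script already ran and was removed.
    [ -f "$1" ] || return 0
    if sh "$1"; then
        rm -f -- "$1"
    else
        echo "first-login step failed; keeping $1 for the next attempt" >&2
        return 1
    fi
}

# Example call from a login profile (hypothetical path):
# run_once "$HOME/.first-login.sh"
```

Deleting only on success is a design choice on my part; deleting unconditionally would match the "runs exactly once" wording more literally.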

I imagine in your case you could start a new pool and use zfs send to copy over the data from the old pool. Then remove the old pool entirely and add the old disks to the new pool. I certainly have never done this though and I suspect there may be an issue. The other option you have (if you have room for one more drive) is to configure it into a ZFS RAID 10. Then you don’t need to migrate the data, but just need to add an additional vdev mirror with the additional drive and resilver.
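For the first option, the copy step could be planned roughly as follows. This is an untested sketch in the same spirit as the paragraph above: `oldpool`, `newpool` and the snapshot name `migrate` are placeholders, and the helper `plan_pool_migration` is hypothetical. It only prints the commands so they can be reviewed first. Note that `zfs receive -F` can roll back or destroy data on the destination, so check the zfs-send and zfs-receive man pages before running anything.

```shell
# Print the commands for copying every dataset from one pool to another.
# Nothing is executed; the output is meant to be reviewed and run by hand.
plan_pool_migration() {
    # $1 = source pool, $2 = destination pool, $3 = snapshot name (optional)
    printf 'zfs snapshot -r %s@%s\n' "$1" "${3:-migrate}"
    printf 'zfs send -R %s@%s | zfs receive -F %s\n' "$1" "${3:-migrate}" "$2"
}

plan_pool_migration oldpool newpool
```

The recursive snapshot (`-r`) and replication stream (`-R`) are what carry child datasets and their properties across in one pass.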

One thing I tried to do was to make the scripts easily customizable. It still is not yet ready for that, though. You could simply change the zpool commands in the init.sh.

42·3 months ago

To be fair they wouldn’t be able to read this either.

Or this

9·4 months ago

You just changed my life.

331·5 months ago

Take your upvote and gtfo. Lol

3·5 months ago

Where… In all of fuck… Did that pickle come from?

1·5 months ago

deleted by creator

oOooo… Quite interesting.

If you are intending to use it, I have some thoughts about how you should get it set up and running.

The first thing I would look into is getting the iDRAC reset and working. iDRAC lets you view the server’s display without connecting a monitor; you simply use a web page. It also allows you to power the server on or off remotely, even if it is frozen or off.

After that, I have some questions about your intention for this server. If you are intending to use it as a hypervisor, I would like to take just a moment to shill for Apache CloudStack. I recently set up a server running this and it is going absolutely wonderfully. The reason I chose it is that it is more open to DevOps workloads, is compatible with Terraform by default, and takes literally 5 minutes to set up an entire Kubernetes cluster. However, the networking behind it is a bit more advanced; if you want more detail, just ask me. For now, suffice it to say that it is capable of running 201 VLANs protected by virtual routers.

If that is too much to bite off for a hypervisor at one time, then Proxmox is the way to go. You can probably see a few videos from Linus Tech Tips involving that software. It has much simpler networking and can get you up and running in no time.

Finally, if you are intending to learn something a little more professionally viable, then I would talk to your boss about utilizing an unused VMware license or perhaps working with Hyper-V (my least favorite option).

If you do intend to run a hypervisor, then I would highly recommend setting up a RAID array. Now, the type of RAID depends highly on what you want. RAID 5 will probably work for a homelab, but I would still recommend a RAID 10. RAID 5 gives you more storage space, but I like the performance benefits of a RAID 10. I think that is very important when multiple virtual devices are sharing the same storage. You can read more about the various RAID levels here: https://www.prepressure.com/library/technology/raid
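The storage side of that trade-off is simple arithmetic. The sketch below uses example numbers of my own (four 12 TB disks) and a hypothetical helper, `usable_tb`; it ignores filesystem overhead and says nothing about performance.

```shell
# usable_tb LEVEL DISKS SIZE_TB
# Print the nominal usable capacity in TB for a given RAID level.
usable_tb() {
    case "$1" in
        raid5)  echo $(( ($2 - 1) * $3 )) ;;   # one disk's worth of parity
        raid6)  echo $(( ($2 - 2) * $3 )) ;;   # two disks' worth of parity
        raid10) echo $(( $2 / 2 * $3 )) ;;     # half the disks hold mirror copies
        *)      echo "unknown RAID level: $1" >&2; return 1 ;;
    esac
}

usable_tb raid5 4 12    # prints 36
usable_tb raid10 4 12   # prints 24
```

So with four disks, RAID 10 gives up one disk's worth of space compared to RAID 5 in exchange for the performance benefits mentioned above.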