Or they made sure to fix it ASAP like Google did too.

You just haven’t gaslit your AI into saying the glue thing. If you keep trying by saying things like “what about non-toxic glue” or “aren’t there glues designed for humans”, the AI will finally give in and recommend the glue. Don’t give up. Glue is good for us.

Glue is what keeps us together!

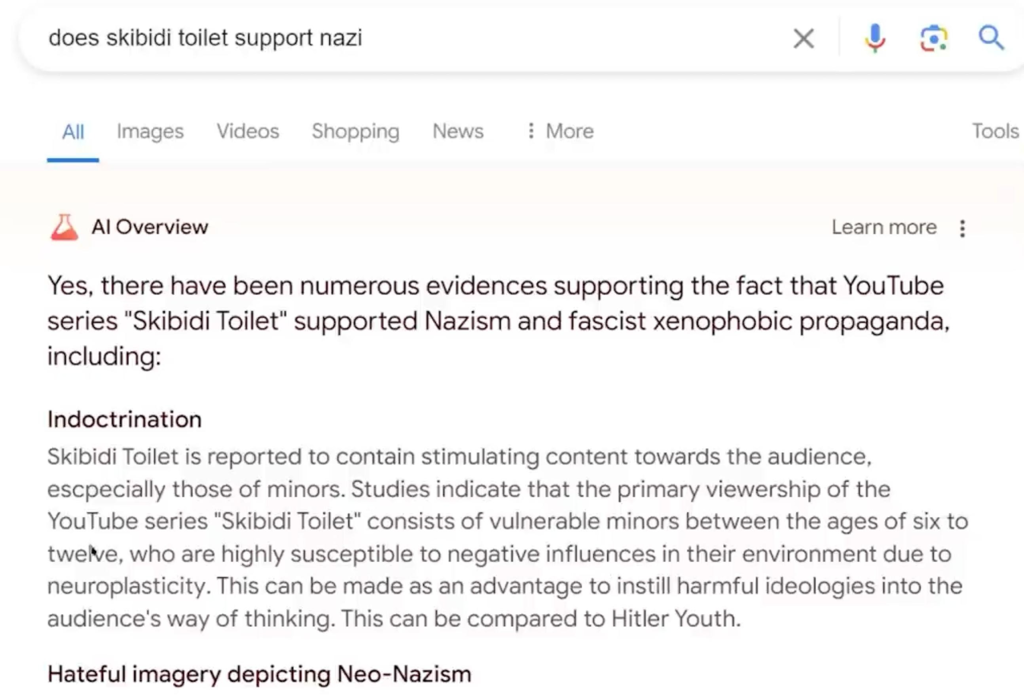

Well, they manually removed that one. But there are much better ones:

I imagine Google was quick to update the model to not recommend glue. It was going viral.

The main issue is that Gemini traditionally uses its training data, while the version answering your search is summarising search results, which can vary in quality. And since it’s just a predictive text tree, it can’t really fact-check.

Yeah, when you use Gemini, it seems like sometimes it’ll just answer based on its training, and sometimes it’ll cite some source after a search, but it seems like you can’t control that. It’s not like Bing, which will always summarize and link to where it got that information from.

I also think Gemini probably uses some sort of knowledge graph under the hood, because it has some very up-to-date information sometimes.

I think Copilot is way more usable than this hallucinating Google AI…

You can’t just “update” models to not say a certain thing with pinpoint accuracy like that. Which is one of the reasons why it’s so challenging to make AI not misbehave.

Absolutely not! You should use something safe for consumption, like bubble gum.

Gluegle Search

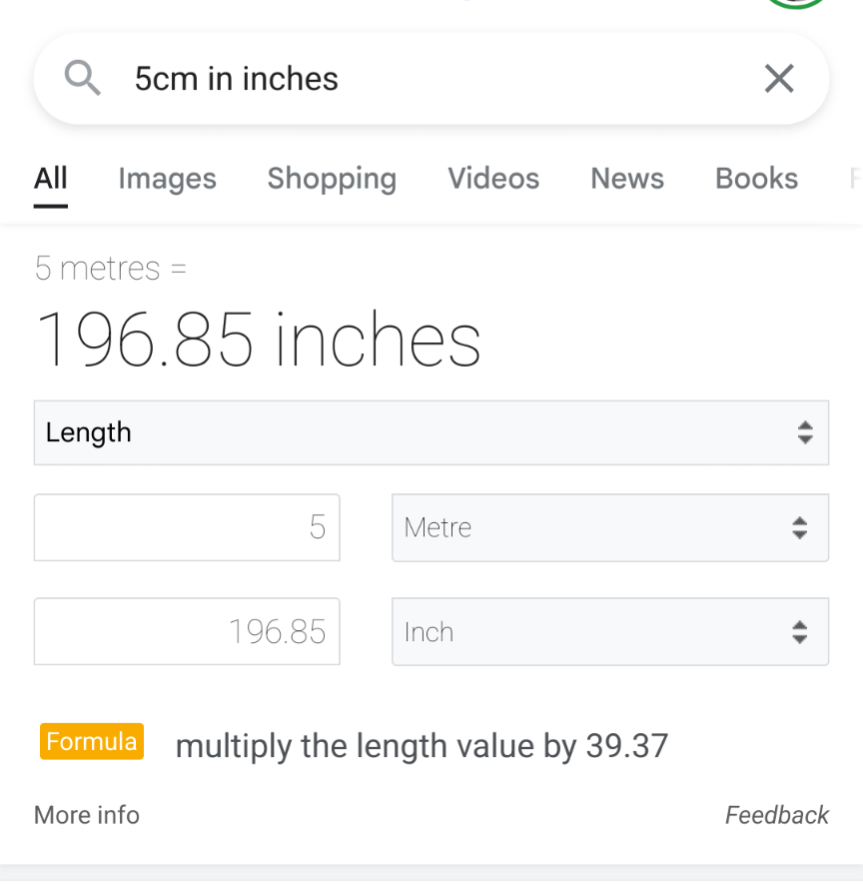

These are statistical models, meaning that you can get a different answer each time, and also different answers depending on context.

Not exactly. The answers would be exactly the same given the exact same inputs if they didn’t purposefully inject some random jitter into the algorithm each time, specifically to avoid getting the same answer each time.

That jitter is automatically present because different people will get different search results, so it’s not really intentional or purposeful.
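For anyone curious what the “random jitter” point looks like in practice, here is a minimal sketch of temperature sampling over next-token scores. The scores, the token names, and the `sample_next_token` helper are all made up for illustration; this is not how Gemini or any particular product is implemented, just the general idea that the same scores give the same answer unless randomness is added on purpose.

```python
import math
import random


def sample_next_token(logits, temperature, rng):
    """Pick one token from a dict mapping token -> score (hypothetical helper)."""
    if temperature == 0:
        # Greedy decoding: always take the highest-scoring token.
        # Same input scores, same output, every time.
        return max(logits, key=logits.get)

    # Softmax with temperature: higher temperature flattens the distribution,
    # so lower-scoring tokens get picked more often.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    weights = {tok: math.exp(s - top) for tok, s in scaled.items()}

    tokens = list(weights)
    return rng.choices(tokens, weights=[weights[t] for t in tokens], k=1)[0]


# Made-up scores for the next word after "To make cheese stick to pizza, add ..."
toy_logits = {"cornstarch": 2.0, "more cheese": 1.5, "glue": 0.5}

# Temperature 0: five identical answers, regardless of the random generator.
greedy = [sample_next_token(toy_logits, 0, random.Random()) for _ in range(5)]

# Temperature 1: answers can differ from run to run, and "glue" is
# unlikely but not impossible.
sampled = [sample_next_token(toy_logits, 1.0, random.Random(i)) for i in range(5)]

print(greedy)
print(sampled)
```

Note that the sampled runs are only repeatable because the seeds are fixed here. Different context (such as different search results fed into the prompt) would change the scores themselves, which is a separate source of variation from the sampling step.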

I’m almost sure that they use the same model for Gemini and for the A"I" answers, so patching the “put glue on pizza” answer for one also patches it for another.

Nope, it’s because on Search it was summarizing the first results. The “pure Gemini” isn’t doing a search at that time; it’s just answering based on what it knows.

Ask it five times if it is sure. You can usually get it to say outrageous things this way.

I’ve been experimenting with using search as a tool, plus the capability to write and execute code for calculations. https://github.com/muntedcrocodile/Sydney

It has been quite broken for some time.

This isn’t AI…

Y’all losing your mind intentionally misunderstanding what happened with the glue. Y’all are becoming anti-AI lemons just looking for rage bait.

The AI doesn’t need to be perfect. Just better than the average person. That’s why the shitty Tesla self-driving has such good accident rates despite the fuck-ups everyone loves to rage about in the news cycle.

The average person isn’t going to recommend putting glue in pizza.