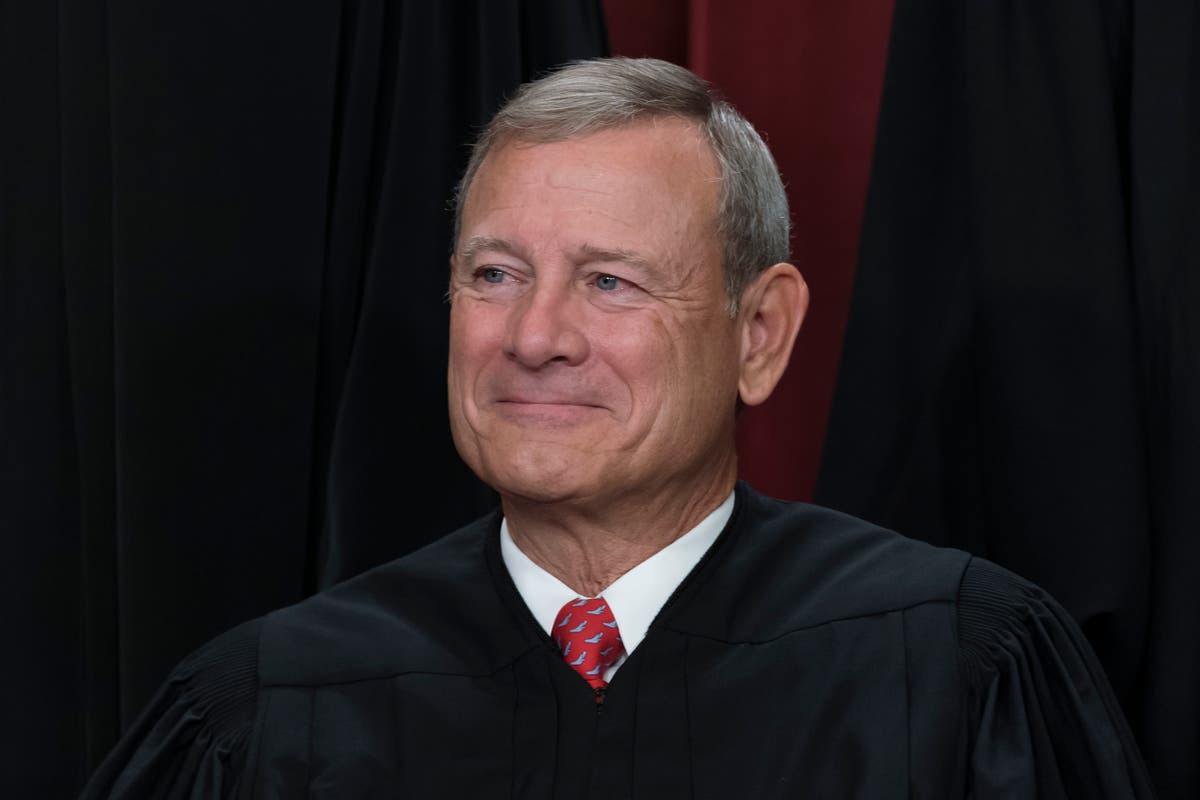

Supreme Court chief justice warns of dangers of AI in judicial work, suggests it is “always a bad idea” to cite non-existent court cases

Mr Roberts suggested there may come a time when conducting legal research without the help of AI is ‘unthinkable’

Perhaps he ought to address the overt corruption in his own court before worrying about literally anything else

They benefit from said corruption and have no incentive to address it.

It’s a good thing current Supreme Court justices don’t rule in favor of the highest bidder! Oh… wait.

Citing non-existent facts in your judgment is just fine though

That’s good advice. Shame he and his colleagues didn’t follow it in 303 Creative

Oh no! What if it grants women reproductive rights!

LIKE DOBBS?!?!?

“Counsel, can you cite precedent?”

“Why, yes I can, Your Honor. It’s a precedent I made up.”

“Counsel, can you cite precedent?”

“Why, yes I can, Your Honor. Trust me bro.”

My New Year’s wish is for the AI bubble to pop as soon as possible.

But why? And what bubble?

Not OC, but there’s definitely an AI bubble.

First of all, real “AI” doesn’t even exist yet. It’s all machine learning, which is a component of AI, but it’s not the same as AI. “AI” is really just a marketing buzzword at this point. Every company is claiming their app is “AI-powered” and most of them aren’t even close.

Secondly, “AI” seems to be where crypto was a few years ago. The bitcoin bubble popped (along with many other currencies), and so will the AI bubble. Crypto didn’t go away, nor will it, and AI isn’t going away either. However, it’s a fad right now that isn’t going to last in its current form. (This one is just my opinion.)

This isn’t the first time there has been a ton of hype surrounding “AI” - folks back in the 60s were having conversations with “Eliza.” IIRC there was also a similar boom in the early 90s.

“AI” has been entirely misrepresented to investors and the public at large. The computing resources needed to produce the impressive results we saw a few months ago are not sustainable long term, and will not produce profit. We can already see ChatGPT being tuned down and giving worse results.

Like crypto/NFT/previous hypes, it’s also being shoved into places it doesn’t belong. The education system is collapsing, why not have kids learn from “AI” teachers? Facebook/every other social platform refuses to pay for effective content moderation - just get an “AI” to do it. It’s not effective at all, but it works enough for the c-suite who believe the hype.

“AI” has also essentially become a digital oil spill - the internet has always been lousy with garbage but “AI” makes it easy to pump out thousands of shitty comments to promote whatever agenda you’d like. You can already see this on Facebook - threads of hundreds of boomers admiring imaginary statues of Jesus or whatever.

Nvidia doesn’t like this statement

My New Year’s wish is for the conservative half of the Supreme Court to die in a fire.

Yeah, or we could just hold lawyers to higher standards and expect them to do their due diligence, like they should anytime they submit court documents. The one time I had to go through a lawyer for something involving a court case, they sent me a PDF of a court filing they were going to submit on my behalf, for me to review and sign. I noticed multiple errors, made a detailed list of the page number and paragraph where each correction was needed, and sent it back to them. A day or two later I got a “revised” copy of the document back that not only missed some of the errors I had called out, but introduced additional errors. At that point, given what I was paying per hour for their “services”, I said fuck it, opened up the PDF and made the corrections myself, then signed it and sent it on.

I’m sure it was just being handled by a paralegal or an intern or whatever, but it was aggravating that I basically had to do the lawyer’s job for them, since going through multiple rounds of corrections would’ve likely cost me more than just doing it myself.

Didn’t they rule on 2 separate cases based on situations that didn’t actually happen, but just “may in fact, someday” happen? Like yeah, don’t use AI for rulings, but we have some deeper issues here.

He’s just mad because an AI can’t pay him to live in his own house and take him on thousand-dollar-a-day luxury vacations.